In short

- Experts tell Decrypt that the upcoming GPT-5 will feature extensive context windows, built-in personalization, and true multimodal capabilities.

- Some believe GPT-5 will spark an AI revolution, while others warn it will be another incremental step with new limitations.

- Experts think OpenAI's recent talent exodus could affect its future plans, but not the upcoming release of GPT-5.

Look out: OpenAI’s GPT-5 is expected to drop this summer. Will it be an AI blockbuster?

Sam Altman confirmed the plan in June during the company’s first podcast episode, casually mentioning that the model, which he said will merge the capabilities of OpenAI’s earlier models, will probably arrive “sometime this summer.”

Some OpenAI watchers predict it will arrive within weeks. An analysis of OpenAI’s model release history noted that GPT-4 was released in March 2023, followed by GPT-4 Turbo (which powers ChatGPT) in November 2023. GPT-4o, a faster multimodal model, launched in May 2024. In other words, OpenAI has been refining and iterating on its models at a faster pace.

But not fast enough for the brutally fast-moving, competitive AI market. In February, when asked on X when GPT-5 would be released, Altman said “weeks/months.” Weeks have indeed become months, and in the meantime competitors have quickly closed the gap, with Meta spending billions of dollars over the last 10 days to poach some of OpenAI’s best scientists.

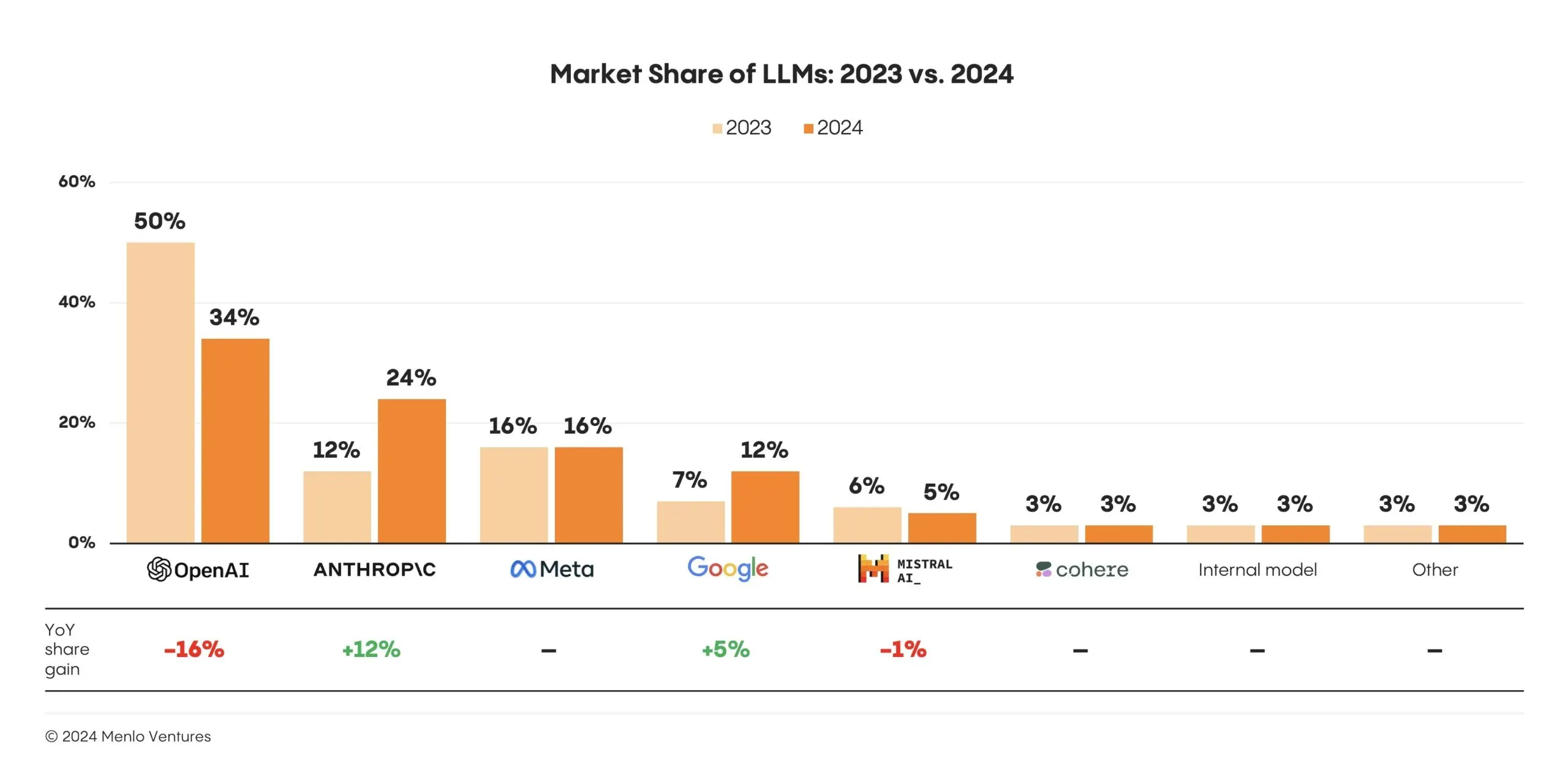

According to Menlo Ventures, OpenAI’s market share fell from 50% to 34%, while Anthropic’s doubled from 12% to 24%. Google’s Gemini 2.5 Pro absolutely crushed the competition in mathematical reasoning, DeepSeek R1 became synonymous with “revolutionary” as the poster child for alternatives to closed-source models, and even xAI’s Grok (previously known for its “Fun Mode” setting) started being taken seriously.

What to expect from GPT-5

The upcoming GPT model, according to Altman, will effectively be one model to rule them all.

GPT-5 is expected to unite OpenAI’s various models and tools into a single system, eliminating the need for a “model selector.” Users will no longer have to choose between specialized models; one system will process text, images, audio, and possibly video.

Until now, these tasks have been split across GPT-4.1, DALL-E, GPT-4o, o3, Advanced Voice, vision, and Sora. Consolidating everything into a single, truly multimodal model is a pretty big feat.

GPT-5 = Level 4 on the AGI scale.

Now compute is all that’s needed to multiply that x1000, and they can work autonomously in organizations.

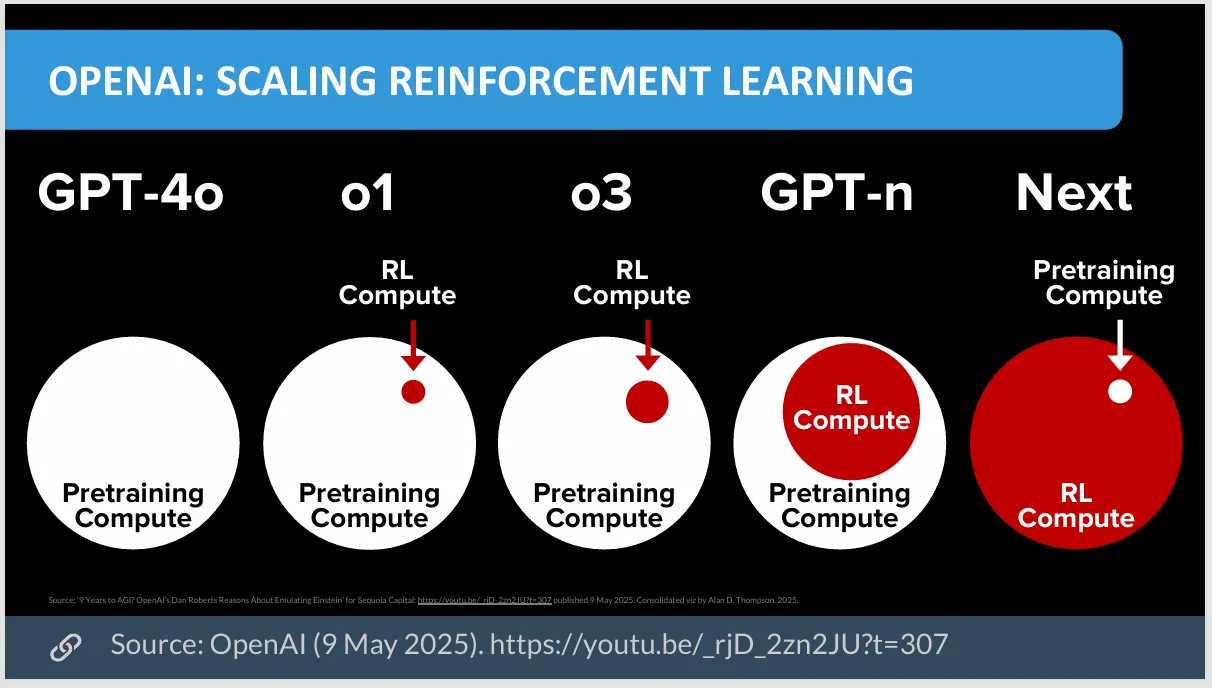

Sam discusses the future development direction: “GPT-5 and GPT-6, […] will use reinforcement and will be like discovering …” https://t.co/Ewhrzremey pic.twitter.com/UPS0AMUFJY

– Chubby♨️ (@kimmonismus) February 3, 2025

The technical specifications look ambitious too. The model is expected to have a significantly larger context window, possibly more than 1 million tokens, with some reports speculating it could reach 2 million. For context, GPT-4o maxes out at 128,000 tokens. That’s the difference between processing a chapter and digesting an entire book.
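The jump from 128,000 tokens to 1 or 2 million is easier to picture as arithmetic. The snippet below is a back-of-the-envelope sketch, not a measurement: it uses only the context-window figures cited in this article plus an assumed ratio of about 0.75 English words per token, a common rule of thumb that varies by tokenizer and language.

```python
# Back-of-the-envelope comparison of the context windows cited above.
# ASSUMPTION: ~0.75 English words per token (rule of thumb; varies by tokenizer).
WORDS_PER_TOKEN = 0.75

windows = {
    "GPT-4o (reported max)": 128_000,
    "GPT-5 (rumored, low end)": 1_000_000,
    "GPT-5 (rumored, high end)": 2_000_000,
}

baseline = windows["GPT-4o (reported max)"]


def summarize(windows, baseline, words_per_token=WORDS_PER_TOKEN):
    """Return (name, tokens, ratio vs. baseline, approx. words) for each window."""
    rows = []
    for name, tokens in windows.items():
        ratio = tokens / baseline
        words = tokens * words_per_token
        rows.append((name, tokens, ratio, words))
    return rows


for name, tokens, ratio, words in summarize(windows, baseline):
    print(f"{name:28} {tokens:>9,} tokens  {ratio:5.1f}x  ~{words:,.0f} words")
```

On these assumptions, a 1-million-token window is roughly 7.8 times GPT-4o’s limit and a 2-million-token window roughly 15.6 times; the word counts shift if a different words-per-token ratio is used.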

In 2024, OpenAI began rolling out experimental memory features in GPT-4 Turbo, allowing the assistant to remember details such as a user’s name, tone preferences, and ongoing projects. Users can view, update, or delete these memories, which are built up gradually over time rather than from a single interaction.
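To make the view/update/delete behavior concrete, here is a minimal and entirely hypothetical sketch of a per-user memory store. It is not OpenAI’s implementation or API: the class and method names (`MemoryStore`, `remember`, `view`, `forget`, `as_context`) are invented for illustration, and a real system would also need persistence, access control, and some policy for deciding what is worth remembering.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Memory:
    key: str             # e.g. "name", "tone", "project" (hypothetical keys)
    value: str
    updated_at: datetime


@dataclass
class MemoryStore:
    """Hypothetical per-user memory store supporting view, update, and delete."""
    _items: dict[str, Memory] = field(default_factory=dict)

    def remember(self, key: str, value: str) -> None:
        # Add a new memory, or overwrite an existing one (the "update" case).
        self._items[key] = Memory(key, value, datetime.now(timezone.utc))

    def view(self) -> dict[str, str]:
        # Let the user see everything currently stored.
        return {k: m.value for k, m in self._items.items()}

    def forget(self, key: str) -> bool:
        # Delete a memory; return True only if something was removed.
        return self._items.pop(key, None) is not None

    def as_context(self) -> str:
        # Render memories as plain text that could be prepended to a prompt.
        return "\n".join(f"{k}: {m.value}" for k, m in sorted(self._items.items()))


# Memories accumulate across interactions, and the user stays in control.
store = MemoryStore()
store.remember("name", "Alex")
store.remember("tone", "concise")
store.remember("project", "GPT-5 explainer")
store.remember("tone", "casual")   # user updates a preference
store.forget("project")            # user deletes a memory
print(store.view())
```

The design choice here is that every memory is an explicit key/value entry the user can inspect and remove, which mirrors the view/update/delete controls described above; how a production assistant actually stores and retrieves memories is not specified in the source.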

In GPT-5, memory is expected to be deeper and more seamless; after all, the model would be able to process roughly 25 times more information about you, with possibly 2 million tokens instead of 80,000. That would let the model recall conversations weeks later, build up contextual knowledge over time, and offer continuity more like a personalized digital assistant.

Improvements in reasoning sound equally ambitious. This progress is expected to show up as a shift toward “structured chain-of-thought” processing, letting the model break complex problems into logical, multi-step sequences that mirror human deliberative thinking.

As for parameters, rumors range from 10 trillion to 50 trillion, all the way up to a head-spinning quadrillion. But as Altman himself has said, “the era of parameter scaling is already over,” as AI training techniques shift the focus from quantity to quality, with better learning approaches making smaller models extremely powerful.

And that points to another fundamental problem for OpenAI: it is running out of internet data to train on. The solution? Having AI generate its own training data, which could mark a new era in AI training.

The experts weigh in

“The next leap will be synthetic data generation in verifiable domains,” Andrew Hill, CEO of the on-chain AI agent arena Recall, told Decrypt. “We’re hitting walls with internet-scale data, but the reasoning breakthroughs show that models can generate high-quality training data when you have verification mechanisms. The simplest examples are math problems, where you can check whether the answer is correct, and coding, where you can run unit tests.”

Hill sees this as transformative: “The leap is about creating new data that’s actually better than human-generated data, because it’s iteratively refined through verification loops and created much faster.”
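Hill’s two examples, checkable math answers and unit-tested code, can be sketched in a few lines. This is a toy illustration under loud assumptions: `propose_math_example` is a hypothetical stand-in for a model that sometimes produces wrong answers, and the pipeline shows only the filtering step (keep what verifies, discard the rest), not the iterative refinement Hill describes or any lab’s actual training setup.

```python
import random
from typing import Callable


def propose_math_example(rng: random.Random) -> tuple[int, int, int]:
    # HYPOTHETICAL stand-in for a model proposing (a, b, answer) for "a * b = ?".
    a, b = rng.randint(1, 99), rng.randint(1, 99)
    answer = a * b
    if rng.random() < 0.3:  # simulate a 30% error rate; the offset is never zero
        answer += rng.choice([-1, 1]) * rng.randint(1, 10)
    return a, b, answer


def verify_math(a: int, b: int, answer: int) -> bool:
    # Verifiable domain: the answer can be checked exactly.
    return a * b == answer


def verify_code(candidate: Callable[[list[int]], list[int]],
                tests: list[tuple[list[int], list[int]]]) -> bool:
    # Verifiable domain: run unit tests against a proposed function.
    try:
        return all(candidate(list(inp)) == out for inp, out in tests)
    except Exception:
        return False  # a crashing candidate fails verification


def build_dataset(n: int, seed: int = 0) -> list[str]:
    # Keep sampling until n examples have passed verification.
    rng = random.Random(seed)
    kept = []
    while len(kept) < n:
        a, b, ans = propose_math_example(rng)
        if verify_math(a, b, ans):
            kept.append(f"Q: {a} * {b} = ?  A: {ans}")
    return kept


# Unit tests for a hypothetical "sort a list" coding task.
SORT_TESTS = [([3, 1, 2], [1, 2, 3]), ([], []), ([5, 5, 1], [1, 5, 5])]

for row in build_dataset(3):
    print(row)
print(verify_code(sorted, SORT_TESTS))                         # correct candidate
print(verify_code(lambda xs: list(reversed(xs)), SORT_TESTS))  # buggy candidate
```

Because every kept example has passed a check, the resulting dataset contains no wrong answers even though the generator is noisy; that property is what makes verifiable domains attractive for synthetic data.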

Benchmarks are another battleground: AI expert and educator David Shapiro expects the model to hit 95% on MMLU and jump from 32% to 82% on SWE-bench, which would put it in the territory of a god-tier AI model. If even half of that is true, GPT-5 will grab the headlines. And there is real confidence internally, with some OpenAI researchers even hyping the model ahead of its release.

It’s wild to see how people use ChatGPT now, knowing what’s coming.

– Tristan (@itstkai) June 12, 2025

Don’t believe the hype

Experts interviewed by Decrypt warned that anyone expecting GPT-5 to achieve AGI-level competence should temper their enthusiasm. Hill said he expects an “incremental step, disguised as a revolution.”

Wyatt Mayham, CEO of Northwest AI Consulting, went a little further, predicting GPT-5 would probably be “a meaningful leap rather than an incremental one,” and adding: “I would expect longer context windows, more native multimodality, and shifts in how agents can act and reason.”

But for every two steps forward comes one step back, Mayham said: “Every major release solves the most obvious limitations of the previous generation while introducing new ones.”

GPT-4 fixed GPT-3’s reasoning gaps but hit data walls. The reasoning models (o3) cracked logical thinking but are expensive and slow.

Tony Tong, CTO of Intellectia AI, a platform that offers AI insights for investors, is also cautious: he expects a better model, but not the world-changing one many AI hypers anticipate. “My bet is that GPT-5 will combine deeper multimodal reasoning, better grounding in tools or memory, and major steps forward in coordination and agent behavior control,” Tong told Decrypt. “Think: more verifiable, more reliable, and more adaptive.”

Patrice Williams-Lindo, CEO of Career Nomad, predicted that GPT-5 will be little more than an “incremental revolution.” However, she suspects it could be especially good for everyday AI users rather than business applications.

“The compounding effects of reliability, contextual memory, multimodality, and lower error rates could change how people trust and use these systems every day. That could be a huge win in itself,” said Williams-Lindo.

Some experts are simply skeptical that GPT-5, or any other LLM, will be all that memorable.

AI researcher Gary Marcus, who has been critical of pure scaling approaches (the idea that better models just need more parameters), wrote in his annual predictions: “There may be no ‘GPT-5-level’ model (meaning a huge, across-the-board quantum leap forward as judged by community consensus) in 2025.”

Marcus is betting on upgrade announcements rather than brand-new foundation models. That said, this is one of his low-confidence predictions.

The billion-dollar brain drain

Whether Mark Zuckerberg’s raid on OpenAI’s brain trust will slow the launch of GPT-5 is anyone’s guess.

“It absolutely slows down their efforts,” David A. Johnston, lead code maintainer at the decentralized AI network Morpheus, told Decrypt. Beyond money, Johnston believes top talent is morally motivated to work on open-source initiatives like Llama rather than closed-source alternatives like ChatGPT or Claude.

Still, some experts think the project is already far enough along that the talent drain won’t affect it.

Mayham said a “July 2025 release looks realistic. Even with key talent moving to Meta, I think OpenAI still looks on schedule. They’ve retained core leadership and adjusted comp, so they seem to be holding steady.”

Williams-Lindo added: “OpenAI’s momentum and capital pipeline are strong. What matters is not who left, but how those who remain recalibrate priorities, especially if they double down on productization or pause to tackle safety or legal pressure.”

If history is any guide, the world will soon get its GPT-5 release, along with a flurry of headlines, hot takes, and “Is that all?” moments. And then, just like that, the entire industry will start asking the next big question that matters: when GPT-6?
