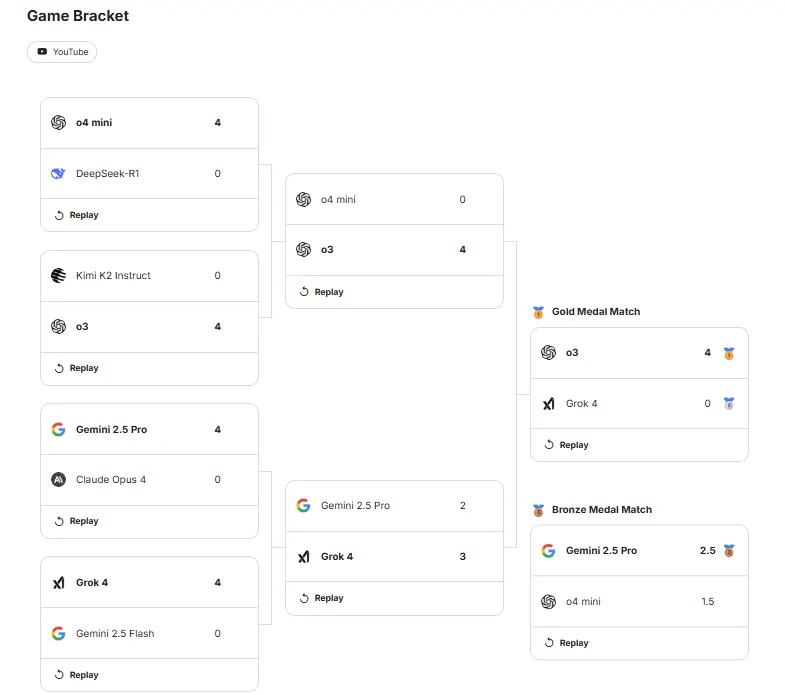

OpenAI’s o3 model, which Sam Altman’s company retired at the end of last week with the release of GPT-5, demolished Elon Musk’s Grok 4 in four consecutive games on Thursday to win Google’s Kaggle Game Arena AI Chess Exhibition.

You might think it was a super-complex spectacle of high-tech titans putting their reasoning to the ultimate test, but for starters, world champion Magnus Carlsen compared both bots to “a talented child who doesn’t know how the pieces move.”

The three-day tournament, which ran August 5-7, forced general-purpose chatbots (yes, the same ones that help you write emails and claim to be approaching human-level intelligence) to play chess without any specialized training. No chess engines, no looking up moves, only whatever chess knowledge they happened to absorb from the internet.

The results were about as elegant as you would expect from forcing a language model to play a board game. Carlsen, who co-commentated the final, estimated that both AIs played at the level of casual players who had recently learned the rules, around 800 Elo. For context, he is arguably the best chess player who has ever lived, with an Elo rating of 2839. These AIs played as if they had learned chess from a corrupted PDF.

“They oscillate between really, really good play and incomprehensible sequences,” Carlsen said during a broadcast after the game. At one point, after watching one AI walk its king directly into danger, he joked that it must think it was playing King of the Hill instead of chess.

The actual games were a master class in how not to play chess, even for those who don’t know the game. In the first game, Grok essentially gave away one of its important pieces for free, then made things worse by trading off more pieces while already behind.

Game two got even stranger. Grok tried to play what chess players call the “poisoned pawn,” a risky but legitimate strategy of grabbing a tempting enemy pawn. Except that Grok took the wrong pawn entirely, one that was clearly defended. Its queen (the most powerful piece on the board) got trapped and was immediately captured.

In game three, Grok had built what looked like a solid position: good positional control, no obvious dangers, and in fact a setup that can help you win the game. Then, in the middle of the game, it basically fumbled the ball straight to the opponent and quickly lost one piece after another.

This was genuinely strange, given that Grok had been a pretty strong competitor before the match against o3, showing solid potential, so much so that chess grandmaster Hikaru Nakamura praised it: “Grok is easily the best so far, just being objective, easily the best.”

The fourth (and final) game offered the only real suspense. OpenAI’s o3 made a huge blunder early on, which spells serious trouble in any reasonable game. Nakamura, who streamed the match, said there were still “a few tricks” left for o3 despite the disadvantage.

He was right: o3 clawed its way back to regain its queen and slowly squeezed out a victory while Grok’s endgame fell apart like wet cardboard.

“Grok made so many mistakes in these games, but OpenAI didn’t,” Nakamura said during his livestream. It was quite the reversal from earlier in the week.

The timing could not have been worse for Elon Musk. After Grok’s strong early rounds, he had posted on X that his AI’s chess skills were just a “side effect” and that xAI had “spent almost no effort on chess.” That turned out to be an understatement.

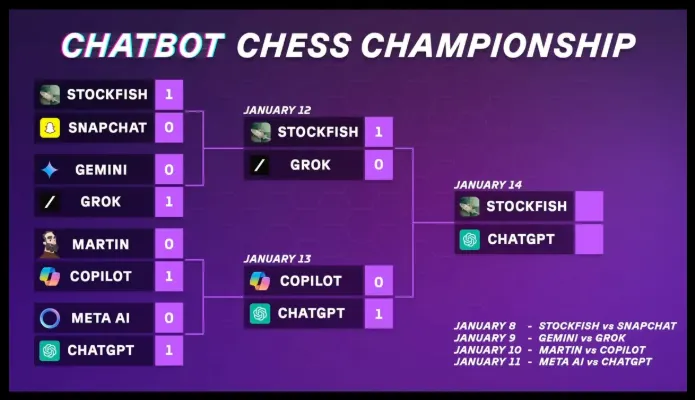

Before this “official” chess tournament, international master Levy Rozman hosted his own tournament earlier this year with less advanced models. He played out every move the chatbots recommended, and the whole thing eventually became a complete mess of illegal moves, bizarre play, and incorrect calculations. Stockfish, an AI built specifically for chess, ultimately won the tournament against ChatGPT. Altman’s AI had been matched against Musk’s in the semifinal, and Grok lost. So it’s 2-0 for Sam.

However, this tournament was different. Each bot had four chances to make a legal move; if it failed four times, it automatically lost. This was not hypothetical. In the early rounds, AIs tried to teleport pieces across the board, bring dead pieces back to life, and move pawns sideways, as if they were playing a fever-dream version of chess they had invented themselves.

They were disqualified.
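For the curious, the four-attempt rule can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the Game Arena’s actual harness: the names `request_move`, `propose`, and `is_legal` are hypothetical, and the “model” here is a scripted list of answers rather than a real chatbot.

```python
from typing import Callable, Optional

MAX_ATTEMPTS = 4  # per the article: four chances to produce a legal move


def request_move(
    propose: Callable[[int], str],    # hypothetical: asks the bot for a move on attempt n
    is_legal: Callable[[str], bool],  # hypothetical: checks the move against the rules
    max_attempts: int = MAX_ATTEMPTS,
) -> Optional[str]:
    """Return the first legal move proposed, or None if every attempt fails (forfeit)."""
    for attempt in range(1, max_attempts + 1):
        move = propose(attempt)
        if is_legal(move):
            return move
    return None  # four illegal answers in a row: the bot loses automatically


# Toy stand-ins: a fixed set of legal moves and a bot that answers from a script.
legal_moves = {"e4", "d4", "Nf3"}
scripted_answers = ["Ke5", "a2a9", "Nf3", "e4"]

move = request_move(lambda n: scripted_answers[n - 1], lambda m: m in legal_moves)
print(move)  # Nf3 (the third attempt is the first legal one)
```

A bot that never produces a legal move gets `None` back, which a tournament loop would treat as a forfeit.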

Google’s Gemini took third place by beating another OpenAI model, salvaging some dignity for the tournament organizers. That bronze medal match featured a particularly absurd game in which both AIs had completely winning positions at different points but could not figure out how to finish.

Carlsen pointed out that the AIs were better at counting captured pieces than at actually delivering checkmate: they understood material advantage but not how to win. It’s like being good at collecting ingredients but unable to cook a meal.

These are the same AI models that, according to tech executives, are approaching human-level intelligence, threaten white-collar jobs, and will revolutionize how we work. Yet they cannot play a board game that has existed for 1,500 years without trying to cheat or forgetting the rules.

So it is probably safe to say that we are safe from AI taking control of humanity, at least for the time being.
