In short

- DeepSeek V4 could arrive within weeks, targeting elite-level coding performance.

- Insiders claim it could beat Claude and ChatGPT on long-context code tasks.

- Developers are already hyped for a possible disruption.

DeepSeek is reportedly planning to drop its V4 model around mid-February, and if internal testing is any indication, Silicon Valley’s AI giants should be nervous.

According to The Information, the Hangzhou-based AI startup could target a release around February 17, which coincides with Lunar New Year, with a model specifically designed for coding tasks. People with direct knowledge of the project claim that V4 outperforms Anthropic’s Claude series and OpenAI’s GPT series in internal benchmarks, especially when handling extremely long code prompts.

No benchmarks or other details about the model have been shared publicly, so these claims cannot be independently verified. DeepSeek has not confirmed the rumors either.

Still, the developer community is not waiting for official news. Reddit’s r/DeepSeek and r/LocalLLaMA are already heating up, users are stockpiling API credits, and enthusiasts on

Anthropic blocked Claude subs in third-party apps like OpenCode and reportedly shut down xAI and OpenAI access.

Claude and Claude Code are great, but not 10x better. This will only encourage other labs to move faster with their coding models/agents.

Rumor has it that DeepSeek V4 will be released…

— Yuchen Jin (@Yuchenj_UW) January 9, 2026

This wouldn’t be DeepSeek’s first disruption. When the company released its R1 reasoning model in January 2025, it triggered a sell-off that erased roughly $1 trillion in global market value.

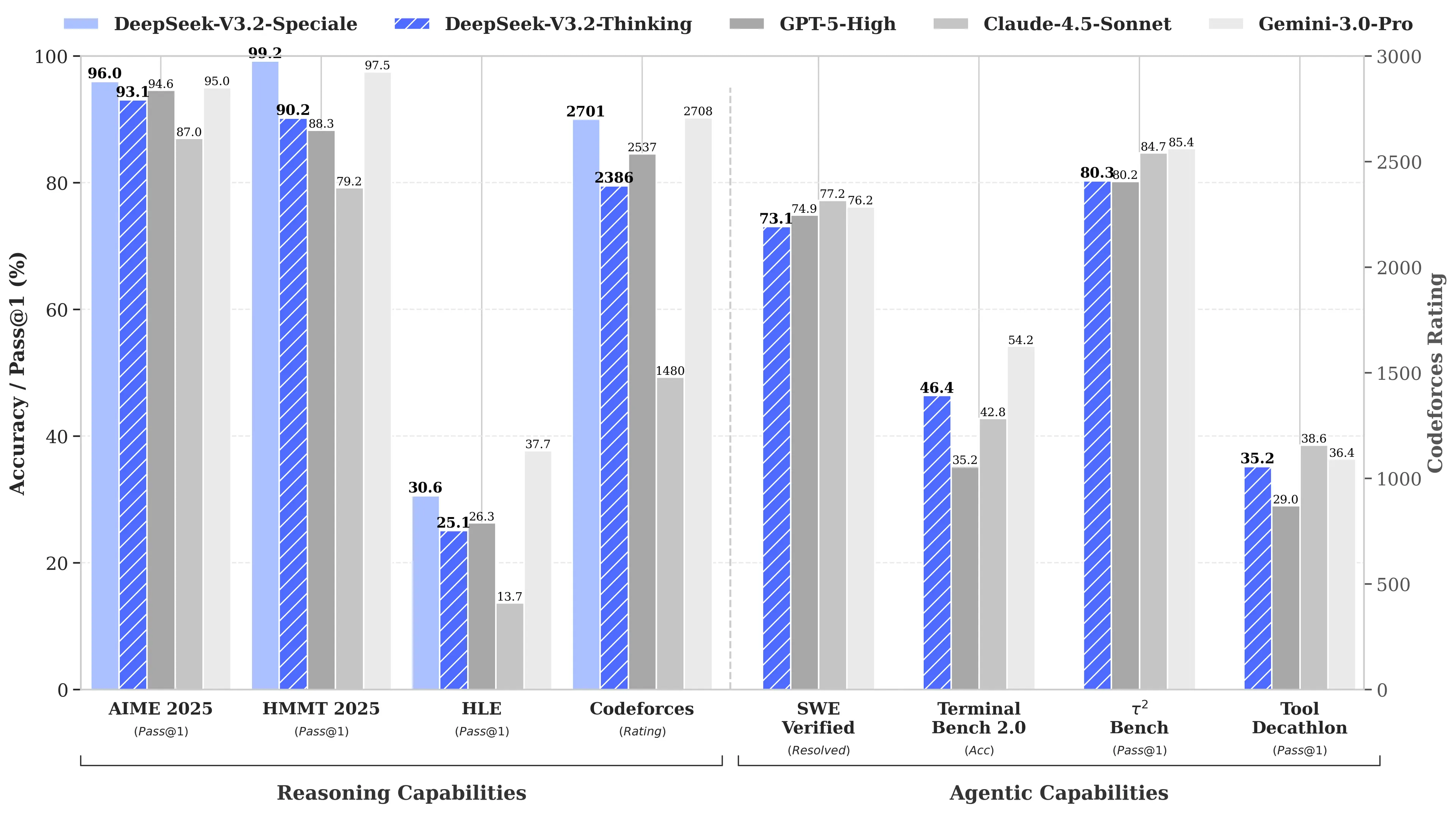

The reason? DeepSeek’s R1 matched OpenAI’s o1 model in math and reasoning despite reportedly costing only about $6 million to develop, roughly 1/68th of what competitors were spending. The V3 model later scored 90.2% on the MATH-500 benchmark, rocketing past Claude’s 78.3%, and the recent “V3.2 Speciale” update improved performance even further.

V4’s coding focus would be a strategic pivot. While R1 emphasized pure reasoning (logic, math, formal proofs), V4 is reportedly a hybrid model, handling both reasoning and non-reasoning tasks, aimed at the enterprise developer market, where generating highly accurate code translates directly into revenue.

To claim dominance, V4 would have to beat Claude Opus 4.5, which currently holds the SWE-bench Verified record at 80.9%. But if DeepSeek’s past launches are any guide, that may be within reach, even with all the constraints a Chinese AI lab faces.

The not-so-secret sauce

Assuming the rumors are true, how could a relatively small lab pull this off?

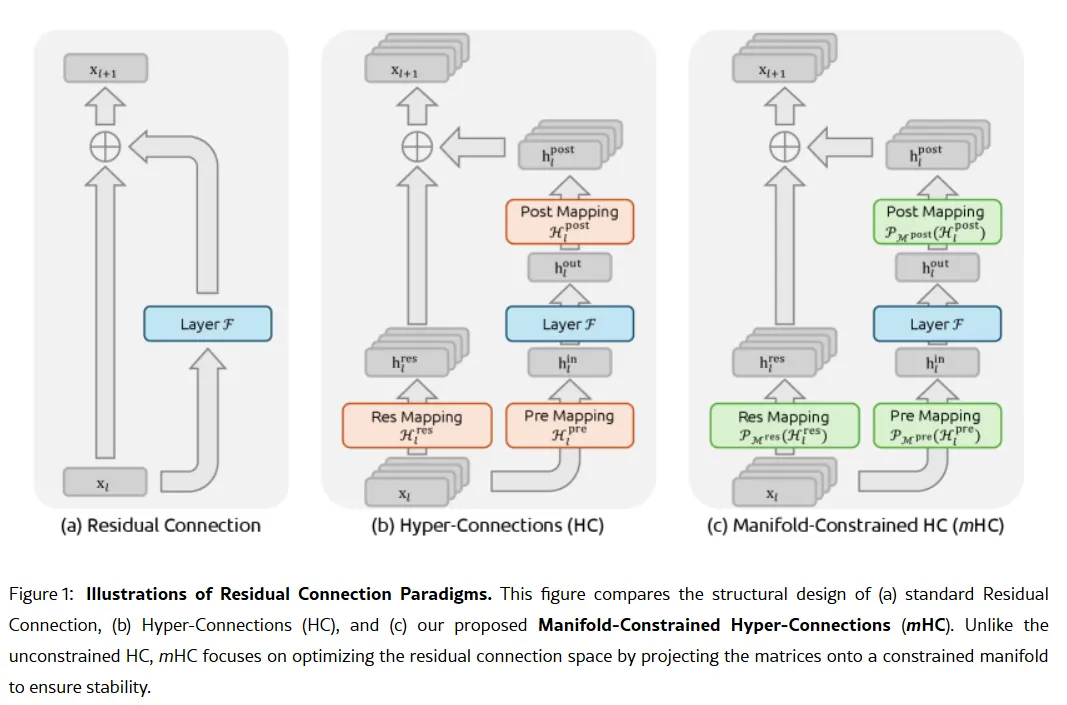

The company’s secret weapon may lie in a research paper published on January 1 introducing Manifold-Constrained Hyper-Connections, or mHC. The new training method, co-authored by founder Liang Wenfeng, addresses a fundamental problem in scaling large language models: how to expand a model’s capacity without training becoming unstable or blowing up.

Conventional architectures pass information from layer to layer through a single narrow path. mHC widens that path into multiple streams that can exchange information, while constraining how they mix so that training does not collapse.
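To make “multiple streams that exchange information without collapsing” a little more concrete, here is a toy Python sketch. It rests on assumptions not stated in this article: that the model keeps n parallel residual streams, mixes them at every layer with an n×n matrix, and that mHC constrains that matrix to be doubly stochastic (non-negative, with rows and columns summing to 1) using Sinkhorn-Knopp normalization. The function names `sinkhorn`, `mix_streams`, and `simulate` are hypothetical, random numbers stand in for learned weights, and this is not DeepSeek’s code.

```python
import math
import random


def sinkhorn(logits, iters=100):
    """Sinkhorn-Knopp: turn a square matrix of real logits into an
    (approximately) doubly stochastic matrix. Rows are normalized last,
    so row sums are exact; column sums converge toward 1."""
    m = [[math.exp(x) for x in row] for row in logits]  # make entries positive
    n = len(m)
    for _ in range(iters):
        # Normalize columns so each sums to 1.
        col_sums = [sum(m[i][j] for i in range(n)) for j in range(n)]
        m = [[m[i][j] / col_sums[j] for j in range(n)] for i in range(n)]
        # Normalize rows so each sums to 1.
        row_sums = [sum(row) for row in m]
        m = [[x / rs for x in row] for row, rs in zip(m, row_sums)]
    return m


def mix_streams(streams, mixing):
    """Output stream i is a weighted sum of all input streams:
    out[i][k] = sum_j mixing[i][j] * streams[j][k]."""
    n, d = len(streams), len(streams[0])
    return [
        [sum(mixing[i][j] * streams[j][k] for j in range(n)) for k in range(d)]
        for i in range(n)
    ]


def simulate(depth, constrained, n=4, d=3, seed=0):
    """Push n streams of width d through `depth` mixing layers and return the
    largest absolute value at the end. Random logits are a stand-in
    (assumption) for learned per-layer mixing weights."""
    rng = random.Random(seed)
    streams = [[rng.uniform(-1, 1) for _ in range(d)] for _ in range(n)]
    for _ in range(depth):
        logits = [[rng.gauss(0, 1) for _ in range(n)] for _ in range(n)]
        # Constrained: project onto doubly stochastic matrices (assumed mHC-style).
        # Unconstrained: use raw weights, which can amplify the signal every layer.
        mixing = sinkhorn(logits) if constrained else logits
        streams = mix_streams(streams, mixing)
    return max(abs(x) for s in streams for x in s)


if __name__ == "__main__":
    print("constrained:  ", simulate(60, constrained=True))
    print("unconstrained:", simulate(60, constrained=False))
```

With the constraint, every output stream is a weighted average of its inputs, so 60 layers of mixing cannot make the signal grow; without it, the same random weights typically inflate it by many orders of magnitude. That contrast is a simplified picture of the instability mHC is described as addressing.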

Wei Sun, chief analyst for AI at Counterpoint Research, called mHC a “striking breakthrough” in comments to Business Insider. The technique, she says, shows that DeepSeek can “bypass computing bottlenecks and make leaps in intelligence,” even with limited access to advanced chips due to U.S. export restrictions.

Lian Jye Su, chief analyst at Omdia, noted that DeepSeek’s willingness to publish its methods signals “renewed confidence in China’s AI industry.” The company’s open-source approach has made it a favorite among developers who see it as the embodiment of what OpenAI used to be, before it focused on closed models and billion-dollar fundraising rounds.

Not everyone is convinced. Some developers on Reddit complain that DeepSeek’s reasoning models waste computing power on simple tasks, while critics argue that the company’s benchmarks don’t reflect the messiness of the real world. A Medium post titled “DeepSeek Sucks – And I’m Done Pretending It Doesn’t” went viral in April 2025, accusing the models of producing “standard buggy nonsense” and “hallucinated libraries”.

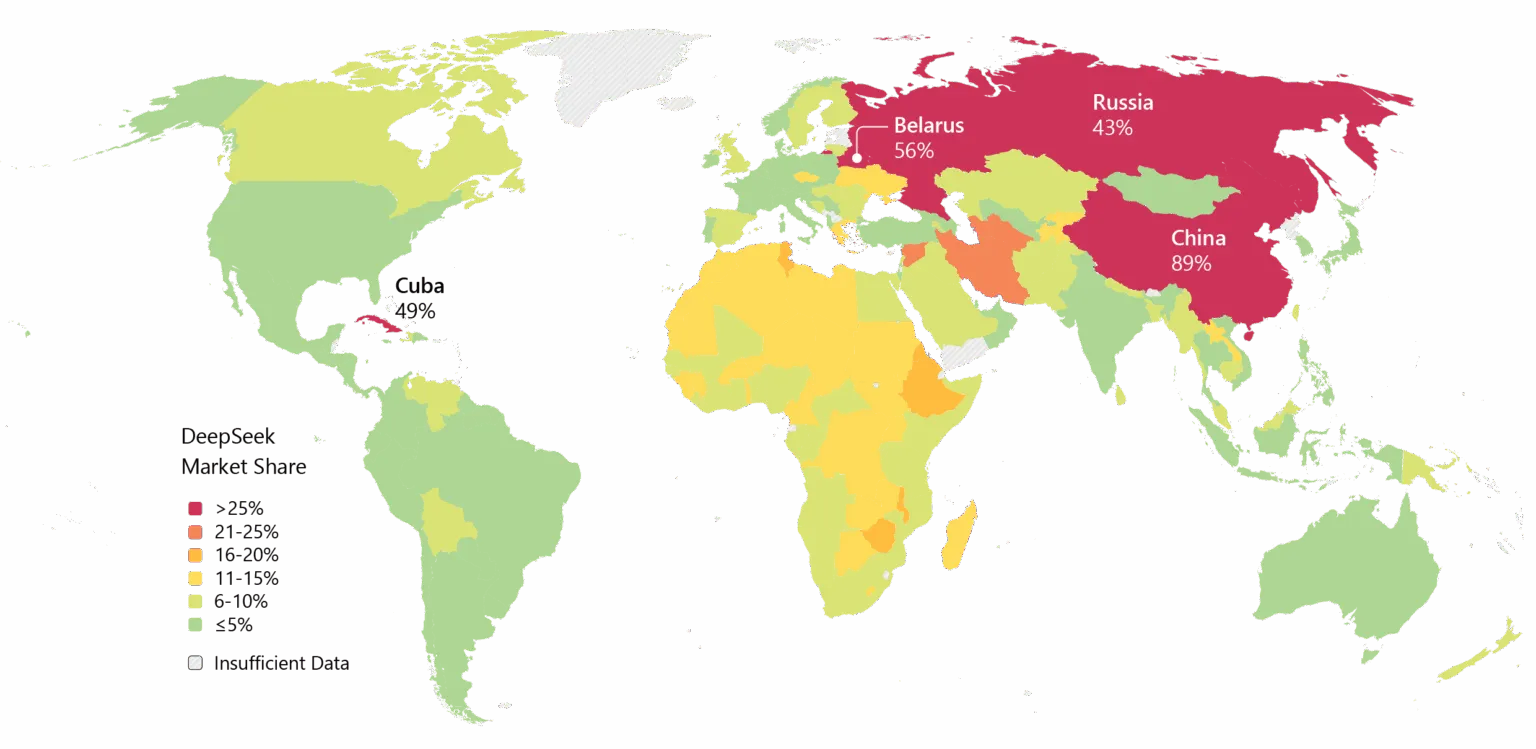

DeepSeek also carries baggage. Privacy concerns have plagued the company, with some governments banning DeepSeek’s native app. The company’s ties to China and questions about censorship in its models add geopolitical friction to debates about its technology.

Still, the momentum is undeniable. DeepSeek has been widely adopted in Asia, and if V4 delivers on its coding promises, corporate adoption in the West could follow.

There is also the timing. According to Reuters, DeepSeek originally planned to release its R2 model in May 2025 but pushed it back after founder Liang became dissatisfied with its performance. With V4 reportedly targeting February and R2 possibly following in August, the company is moving at a pace that suggests urgency, or confidence. Maybe both.
