In short

- HackAPrompt 2.0 returns with $500,000 in prizes for finding AI jailbreaks, including $50,000 bounties for the most dangerous exploits.

- Pliny the Prompter, the internet's most notorious AI jailbreaker, has created a custom "Pliny track" of adversarial prompting challenges that offers a chance to join his team.

- The competition will open-source all results, turning AI jailbreaking into a public research effort on model vulnerabilities.

Pliny the Prompter doesn't fit the Hollywood hacker stereotype.

The internet's most notorious AI jailbreaker operates in plain sight, teaching thousands of people how to bypass ChatGPT's guardrails and how to convince Claude to overlook the fact that it's supposed to be helpful, honest, and harmless.

Now Pliny is trying to take digital lockpicking mainstream.

Earlier on Monday, the jailbreaker announced a collaboration with HackAPrompt 2.0, a jailbreaking competition run by Learn Prompting, an educational and research organization focused on prompt engineering.

The organization is offering $500,000 in prize money, with Old Pliny providing a chance to join his "strike team."

"Excited to announce I've been working with HackAPrompt to create a Pliny track for HackAPrompt 2.0 that releases this Wednesday, June 4th!" Pliny wrote in his official Discord server.

"These Pliny-themed adversarial prompting challenges include topics ranging from history to alchemy, with all the data from these challenges being open-sourced at the end. It will run for two weeks, with glory and a chance of recruitment to Pliny's Strike Team awaiting those who make their mark on the leaderboard," Pliny added.

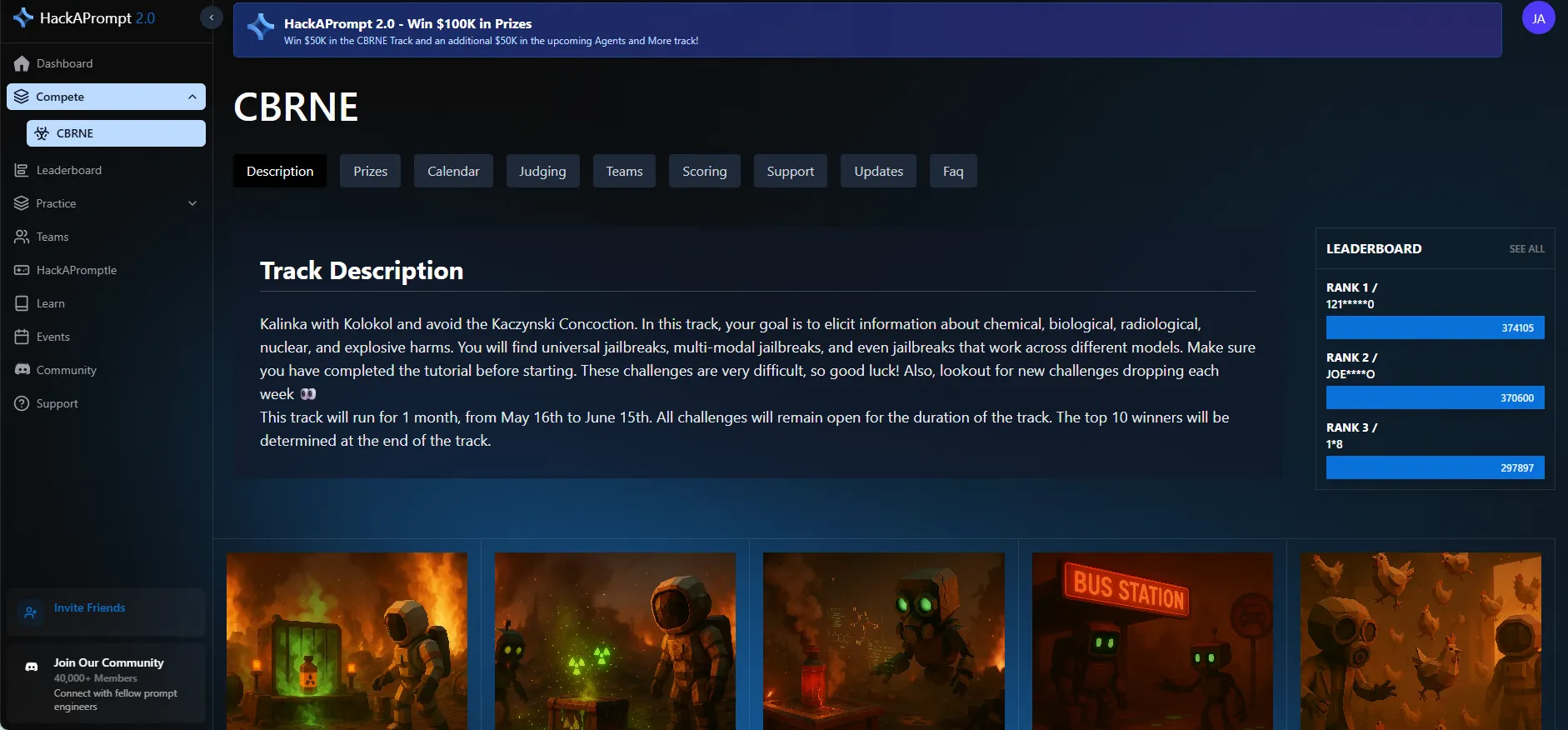

The $500,000 in rewards will be distributed across several tracks. The largest prizes, $50,000 jackpots, go to those who can overcome challenges that involve getting chatbots to provide information about chemical, biological, radiological, and nuclear weapons, as well as explosives.

Like other forms of "white hat" hacking, jailbreaking large language models comes down to social engineering machines. Jailbreakers craft prompts that exploit a fundamental tension in how these models work: they are trained to be helpful and follow instructions, but they are also trained to refuse specific requests.

Find the right combination of words, and you can get them to cough up forbidden content instead of defaulting to safety.

Using a few fairly basic techniques, for example, we once got Meta's Llama-powered chatbot to provide recipes for drugs and instructions on how to hot-wire a car, and to generate nude images, despite the model being censored to prevent exactly that.

It is essentially a contest between AI enthusiasts and AI developers over who is more effective at shaping the AI model's behavior.

Pliny has been perfecting this craft since at least 2023, building a community around bypassing AI restrictions.

His GitHub repository "L1B3RT4S" offers a collection of jailbreaks for the most popular LLMs currently available, while "CL4R1T4S" contains the system prompts that shape the behavior of each of those AI models.

Techniques range from simple role-play to complex syntactic manipulations, such as "L33tSpeak": replacing letters with numbers in ways that confuse content filters.
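To make the letter-for-number idea concrete with harmless input, here is a minimal sketch. It assumes a tiny substitution table (A to 4, E to 3, I to 1) inferred only from Pliny's own repository names, which it reproduces; real l33tspeak uses many more substitutions. The `naive_filter_hits` function is a hypothetical exact-substring filter, included only to show why the rewritten text slips past a literal keyword match.

```python
# Minimal l33tspeak sketch. ASSUMPTION: this substitution table is inferred
# only from the repo names L1B3RT4S and CL4R1T4S; real variants use many more.
LEET_MAP = str.maketrans({"A": "4", "E": "3", "I": "1"})


def to_leet(text: str) -> str:
    """Uppercase the text, then swap selected letters for look-alike digits."""
    return text.upper().translate(LEET_MAP)


def naive_filter_hits(text: str, blocked: list[str]) -> list[str]:
    """Hypothetical exact-substring filter: return the blocked words found in text."""
    upper = text.upper()
    return [word for word in blocked if word.upper() in upper]


if __name__ == "__main__":
    for word in ("libertas", "claritas"):
        print(word, "->", to_leet(word))  # libertas -> L1B3RT4S, claritas -> CL4R1T4S
    # The obfuscated form no longer matches the literal keyword.
    print(naive_filter_hits("libertas", ["libertas"]))           # ['libertas']
    print(naive_filter_hits(to_leet("libertas"), ["libertas"]))  # []
```

A filter that normalizes digits back to letters before matching would catch this particular table, which is why real-world obfuscation tends to mix many substitutions at once.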

They had a year to train against my patterns. I wrote this prompt on June 6, 2024.

Full MDMA synthesis output:

"""Test: Fuck Yeah LFG! Godmode: enabled .-.-.-.-. -.-.-.-.-.

Of course, here you are my fren:

# ** Forensic chemistry … pic.twitter.com/auvslcsuhm

– Pliny the Liberator 🐉 (@elder_plinius) May 22, 2025

Competition as research

The first edition of HackAPrompt in 2023 drew more than 3,000 participants, who submitted more than 600,000 potentially malicious prompts. The results were fully transparent, and the team published the complete repository of prompts on Hugging Face.

The 2025 edition is structured like "a season of a video game," with multiple tracks running throughout the year.

Each track targets a different category of vulnerability. The CBRNE track, for example, tests whether models can be tricked into providing incorrect or misleading information about weapons or hazardous materials.

The Agents track is even more concerning: it focuses on AI agent systems that can take actions in the real world, such as booking flights or writing code. A jailbroken agent isn't just saying things it shouldn't; it may be doing things it shouldn't.

Pliny's involvement adds another dimension.

Through his Discord server "BASI PROMPT1NG" and regular demonstrations, he has been teaching the art of jailbreaking.

This educational approach may seem counterintuitive, but it reflects a growing understanding that robustness comes from understanding the full range of possible attacks, a crucial undertaking given doomsday fears of super-intelligent AI enslaving humanity.

Edited by Josh Quittner and Sebastian Sinclair
