In short

- DeepMind’s Gemini Robotics models let machines plan, reason, and even look up recycling rules online before they act.

- Instead of following scripts, robots running Google’s new AI can solve problems, adapt, and pass skills between each other.

- From packing suitcases to sorting waste, robots powered by Gemini Robotics-ER 1.5 showed early steps toward general intelligence.

Google DeepMind rolled out two AI models this week that aim to make robots smarter than ever. Instead of focusing only on following commands, the updated Gemini Robotics 1.5 and its companion Gemini Robotics-ER 1.5 let robots think through problems, search the internet for information, and pass skills between different robot agents.

According to Google, these models mark a “foundational step toward building robots that can navigate the complexities of the physical world with intelligence and dexterity.”

“Gemini Robotics 1.5 marks an important milestone toward solving AGI in the physical world,” Google said in the announcement. “By introducing agentic capabilities, we’re moving beyond models that react to commands and creating systems that can truly reason, plan, actively use tools, and generalize.”

And that term, “generalization,” is important because models struggle with it.

Robots powered by these models can now handle tasks such as sorting laundry by color, packing a suitcase based on weather forecasts they find online, or checking local recycling rules to throw away trash correctly. Now, as a human, you might say, “Duh, so what?” But to do this, machines need a skill called generalization: the ability to apply knowledge to new situations.

Robots, and algorithms in general, usually struggle with this. For example, if you teach a model to fold pants, it can’t fold a T-shirt unless engineers program every step in advance.

The new models change that. They can pick up on cues, read their environment, make reasonable assumptions, and carry out multi-step tasks that used to be out of reach, or at least extremely difficult, for machines.

But better doesn’t mean perfect. In one experiment, for example, the team showed the robots a set of objects and asked them to sort them into the correct bins. The robots used their cameras to visually identify each item, pulled up San Francisco’s latest recycling guidelines online, and then placed each item where it should go, all on their own, just as a local would.

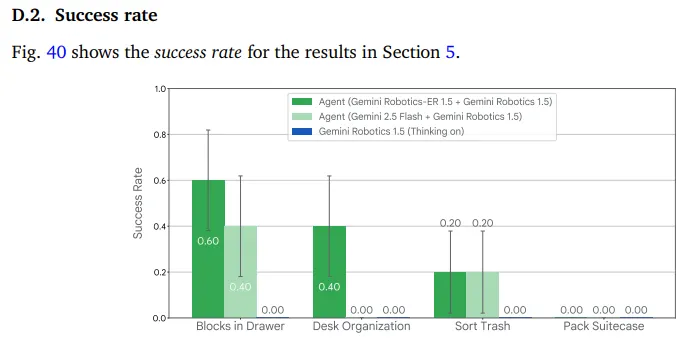

This process combined online search, visual perception, and step-by-step planning to make context-aware decisions that go beyond what older robots could do. The recorded success rate was between 20% and 40%: not ideal, but surprising for a model that could never grasp those nuances before.

How Google turns robots into super-robots

The two models split the work. Gemini Robotics-ER 1.5 acts as the brain, figuring out what needs to be done and creating a step-by-step plan. It can call Google Search when it needs information. Once it has a plan, it passes natural-language instructions to Gemini Robotics 1.5, which handles the actual physical movements.

More technically, Gemini Robotics 1.5 is a vision-language-action (VLA) model that turns visual information and instructions into motor commands, while Gemini Robotics-ER 1.5 is a vision-language model (VLM) that creates multistep plans to complete a mission.
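To make that division of labor concrete, here is a toy Python sketch of a planner/executor split, modeled on the recycling example. It is not DeepMind’s code or the Gemini API: `Planner`, `Executor`, `run_task`, the `INSTRUCTION` pattern, and the stubbed search tool are all hypothetical. The planner’s “reasoning” is a dictionary lookup, and the executor emits symbolic pick/place steps rather than real motor commands.

```python
import re
from dataclasses import dataclass, field
from typing import Callable

# Toy sketch of a planner/executor split. All names are hypothetical;
# this is not DeepMind's implementation or the Gemini API.

# Matches instructions of the form "put the <item> in the <bin> bin".
INSTRUCTION = re.compile(r"put the (.+?) in the (.+) bin")


@dataclass
class Planner:
    """Plays the reasoning role the article assigns to Gemini Robotics-ER 1.5."""

    # Hypothetical tool: takes a search query, returns item -> bin rules.
    search: Callable[[str], dict[str, str]]

    def plan(self, items: list[str], city: str) -> list[str]:
        # Tool call: fetch local rules instead of relying on hard-coded ones.
        rules = self.search(f"{city} recycling rules")
        # One natural-language step per item; unknown items fall back to landfill.
        return [f"put the {item} in the {rules.get(item, 'landfill')} bin" for item in items]


@dataclass
class Executor:
    """Plays the action role the article assigns to Gemini Robotics 1.5."""

    log: list[str] = field(default_factory=list)

    def act(self, instruction: str) -> list[str]:
        # A real VLA model maps camera input plus text to motor commands;
        # this stub only parses the text into symbolic pick/place steps.
        match = INSTRUCTION.fullmatch(instruction)
        if match is None:
            raise ValueError(f"cannot execute: {instruction!r}")
        item, bin_name = match.groups()
        commands = [f"pick({item})", f"place({item}, {bin_name})"]
        self.log.extend(commands)
        return commands


def run_task(planner: Planner, executor: Executor, items: list[str], city: str) -> list[str]:
    """The planner builds the plan once; the executor carries out each step in order."""
    for instruction in planner.plan(items, city):
        executor.act(instruction)
    return executor.log


if __name__ == "__main__":
    # Made-up rules standing in for a real search result.
    fake_rules = {"soda can": "recycling", "banana peel": "compost"}
    planner = Planner(search=lambda query: fake_rules)
    for step in run_task(planner, Executor(), ["soda can", "banana peel"], "San Francisco"):
        print(step)
```

Passing plain-text instructions between the two roles mirrors the split described above: in this sketch, the planner can gain new tools without any change to the executor, and the executor can be swapped without touching the planner.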

For example, when a robot sorts laundry, it reasons through the task internally using a chain of thought: it understands that “sort by color” means whites go in one bin and colors in another, then breaks down the specific motions needed to pick up each garment. The robot can explain its reasoning in plain English, making its decisions less of a black box.

Google CEO Sundar Pichai chimed in on X, noting that the new models will enable robots to reason better, plan ahead, use digital tools like Search, and transfer learning from one kind of robot to another. He called it Google’s “next big step towards general-purpose robots that are truly helpful.”

New Gemini Robotics 1.5 models will enable robots to better reason, plan ahead, use digital tools like Search, and transfer learning from one kind of robot to another. Our next big step towards general-purpose robots that are truly helpful. You can see how the robot reasons as … pic.twitter.com/KW3HTBF6DDD

– Sundar Pichai (@sundarpichai) September 25, 2025

The release puts Google in a spotlight shared with developers such as Tesla, Figure AI, and Boston Dynamics, though each company takes a different approach. Tesla is focused on mass production for its factories, with Elon Musk promising thousands of units in 2026. Boston Dynamics continues to push the boundaries of robot athleticism with its backflipping Atlas. Meanwhile, Google is betting on AI that lets robots adapt to any situation without specific programming.

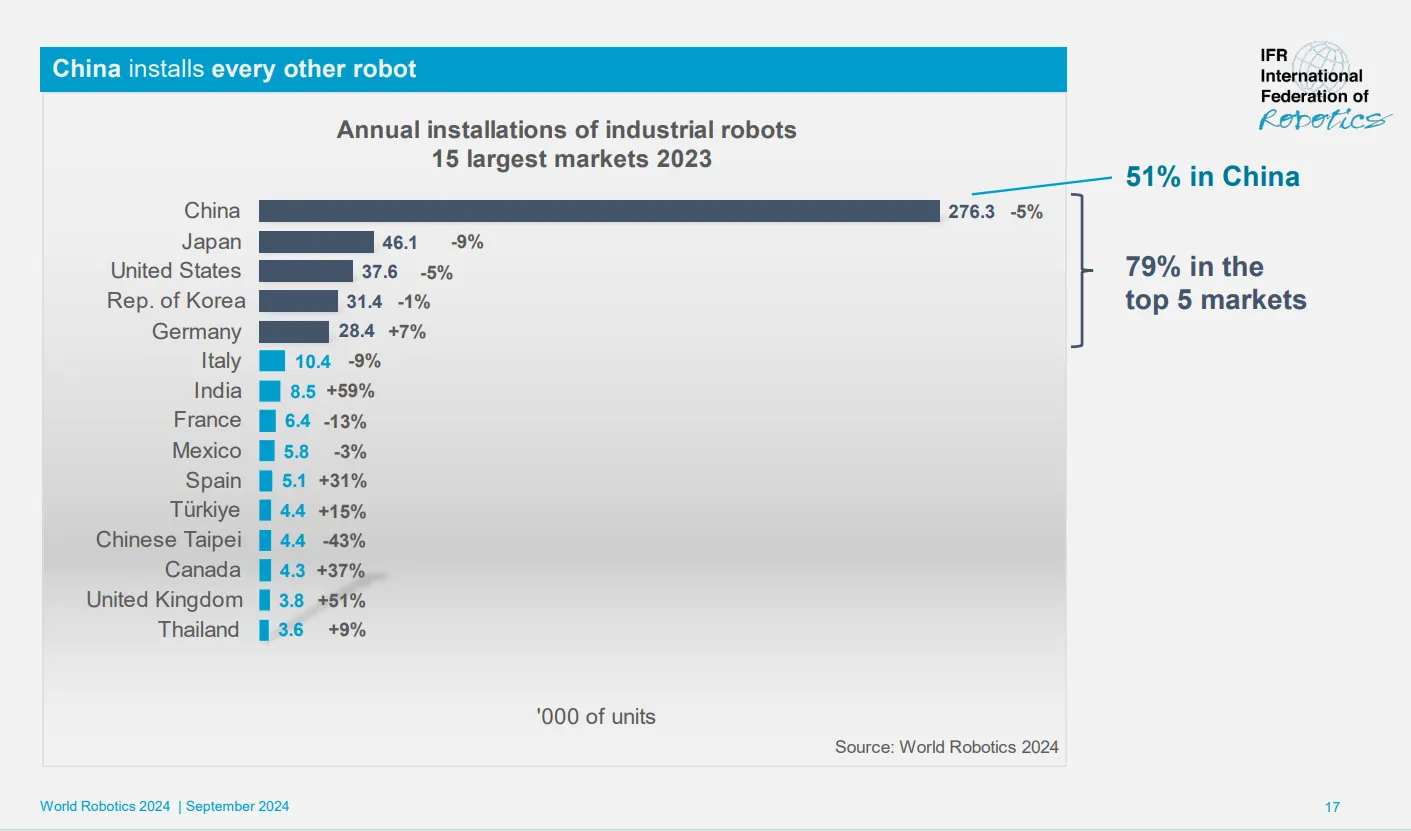

The timing matters. American robotics companies are pushing for a national robotics strategy, including a federal office focused on promoting the industry, at a time when China is making AI and intelligent robots a national priority. China is the world’s largest market for robots that work in factories and other industrial environments, with about 1.8 million robots operating in 2023, according to the Germany-based International Federation of Robotics.

DeepMind’s approach differs from traditional robotics programming, in which engineers meticulously code every movement. Instead, these models learn from demonstration and can adapt on the fly. If an object slips from a robot’s grip or someone moves something mid-task, the robot adjusts without missing a beat.

The models build on DeepMind’s earlier work from March, when robots could only handle a few individual tasks, such as unpacking a bag or folding paper. Now they tackle sequences that would challenge many humans, such as packing appropriately for a trip after checking the weather forecast.

For developers who want to experiment, availability is split. Gemini Robotics-ER 1.5 launched Thursday via the Gemini API in Google AI Studio, meaning any developer can start building with the reasoning model. The action model, Gemini Robotics 1.5, remains exclusive to “select” (meaning “rich,” probably) partners.
