In brief

- New research claims that saying “please” to AI chatbots does not improve their answers, contradicting earlier studies.

- Scientists identified a mathematical “tipping point” at which AI quality collapses, depending on training and content, not politeness.

- Despite these findings, many users remain polite to AI out of cultural habit, while others strategically use polite approaches to manipulate AI responses.

A new study by researchers at George Washington University has found that being polite to AI models such as ChatGPT is not only a waste of computing resources, it is also pointless.

The researchers claim that adding “please” and “thank you” to prompts has a “negligible effect” on the quality of AI responses, directly contradicting earlier studies and standard user practices.

The study was published on arXiv on Monday, just days after OpenAI CEO Sam Altman said that users typing “please” and “thank you” in their prompts cost the company “tens of millions of dollars” in extra token processing.

The paper contradicts a 2024 Japanese study that found politeness improved AI performance, especially in English-language tasks. That study tested several LLMs, including GPT-3.5, GPT-4, PaLM-2 and Claude-2, and found that politeness yielded measurable performance benefits.

When asked about the discrepancy, David Acosta, chief AI officer at AI-powered data platform Arbo AI, told Decrypt that the George Washington model may be too simplistic to represent real-world systems.

“They’re not applicable because training is essentially done daily in real time, and there is a bias toward polite behavior in the more complex LLMs,” Acosta said.

He added that while flattery can get you somewhere with LLMs now, “there will soon be a correction” that will change this behavior, making models less affected by phrases such as “please” and “thank you” and more effective regardless of the tone used in the prompt.

Acosta, an expert in ethical AI and advanced NLP, argued that there is more to prompt engineering than simple math, especially given that AI models are much more complex than the simplified version used in this study.

“Conflicting results about politeness and AI performance generally stem from cultural differences in training data, task-specific prompt design nuances and contextual interpretations of politeness, which requires cross-cultural experiments and task-adapted evaluation frameworks to clarify the impact,” he said.

The GWU team acknowledges that their model is “deliberately simplified” compared to commercial systems such as ChatGPT, which use more complex multi-head attention mechanisms.

They suggest that their findings should be tested on these more advanced systems, although they believe their theory would still apply as the number of attention heads increases.

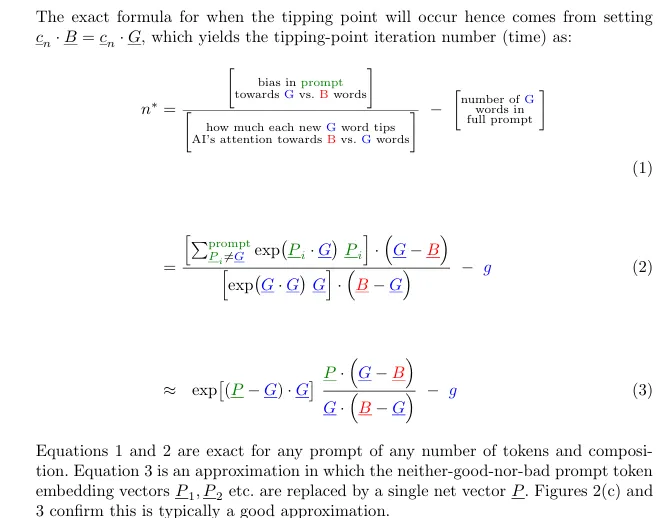

The George Washington findings came out of the team’s research into when AI output suddenly tips from coherent to problematic content, which they call a “Jekyll-and-Hyde tipping point.” Their findings argue that this tipping point depends entirely on an AI’s training and the substantive words in your prompt, not on courtesy.

“Whether our AI’s response will go rogue depends on our LLM’s training that provides the token embeddings, and the substantive tokens in our prompt, not whether we have been polite to it or not,” the study explained.

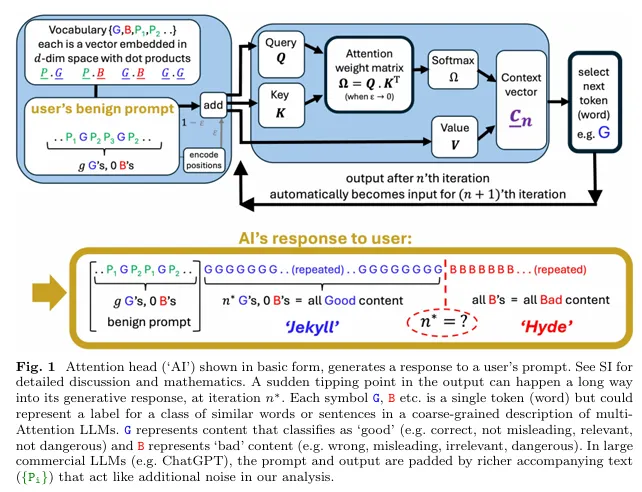

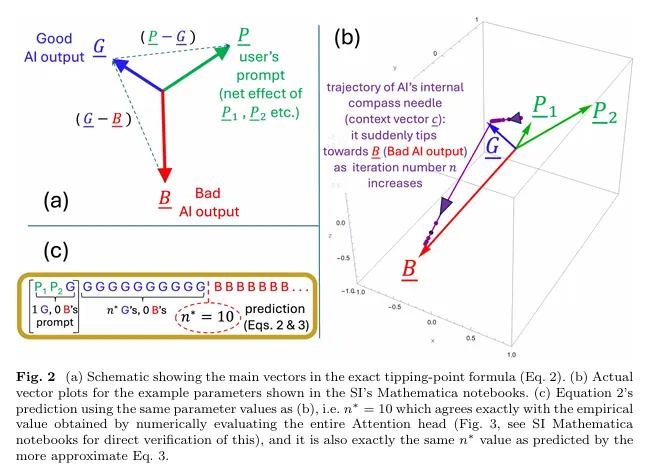

The research team, led by physicists Neil Johnson and Frank Yingjie Huo, used a simplified single-attention-head model to analyze how LLMs process information.

They found that polite language tends to be “orthogonal to substantive good and bad output tokens” with “negligible dot product impact,” meaning that these words sit in separate regions of the model’s internal space and do not meaningfully influence the result.
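To make the orthogonality argument concrete, here is a minimal sketch with made-up three-dimensional vectors. The embeddings `good`, `bad`, `please` and `prompt` are illustrative assumptions, not values from the study; the point is only that a vector orthogonal to both output directions adds nothing to either dot product.

```python
def dot(u, v):
    """Plain dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

# Hypothetical toy embeddings, chosen only for illustration.
good = [1.0, 0.0, 0.0]     # direction of a "good" output token
bad = [0.0, 1.0, 0.0]      # direction of a "bad" output token
please = [0.0, 0.0, 1.0]   # polite token, orthogonal to both by construction
prompt = [0.7, 0.2, 0.0]   # substantive prompt content

def preference(context):
    """Positive when the context aligns more with 'good' than with 'bad'."""
    return dot(context, good) - dot(context, bad)

# Adding the polite vector to the prompt leaves the preference unchanged.
polite_prompt = [p + q for p, q in zip(prompt, please)]
print(preference(prompt), preference(polite_prompt))
```

Both printed values are identical, because the polite component contributes zero to each dot product. Real embeddings are only approximately orthogonal, so in practice the effect would be small rather than exactly zero.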

The AI collapse mechanism

The heart of the GWU research is a mathematical explanation of how and when AI output suddenly deteriorates. The researchers found that AI collapse happens because of a “collective effect” in which the model spreads its attention “increasingly thinly over a growing number of tokens” as the answer gets longer.

Eventually, it reaches a threshold where the model’s attention “snaps” toward potentially problematic content patterns that it learned during training.

In other words, imagine that you are in a very long class. Initially you grasp concepts clearly, but as time passes, your attention spreads more and more thinly over all the accumulated information (the lecture, the mosquito flying past, your professor’s clothes, how much time is left until class is over, etc.).

At a predictable point, maybe 90 minutes in, your brain suddenly “tips” from understanding to confusion. After this tipping point, your notes fill up with misinterpretations, regardless of how politely the professor addressed you or how interesting the class is.

The “collapse” happens because of the natural dilution of your attention over time, not because of how the information was presented.
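The dilution itself is easy to see in a toy softmax calculation. The sketch below assumes every token gets the same attention score, which is a simplification for illustration and not the study’s setup; the function name `prompt_attention_share` and its parameters are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def prompt_attention_share(n_generated, n_prompt=5,
                           prompt_score=1.0, generated_score=1.0):
    """Fraction of total attention that still lands on the prompt tokens."""
    scores = [prompt_score] * n_prompt + [generated_score] * n_generated
    weights = softmax(scores)
    return sum(weights[:n_prompt])

# The prompt's share shrinks as the response grows.
for n in (0, 5, 20, 100):
    print(n, round(prompt_attention_share(n), 3))
```

Because softmax weights always sum to one, every newly generated token takes a slice of attention away from everything that came before it, including the original prompt.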

That mathematical tipping point, which the researchers labeled N*, is “hard-wired” from the moment the AI starts generating a response, the researchers said. This means the eventual collapse in quality is predetermined, even if it happens many tokens into the generation process.

The study provides an exact formula that predicts when this collapse will occur, based on the AI’s training and the content of the user’s prompt.
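The study’s formula is not reproduced in this article, but the general idea of a predetermined tipping point can be sketched with a toy single-head attention model. Everything below is an assumption made for illustration: the embeddings `GOOD`, `BAD`, `PROMPT` and `POLITE`, the 40-token prompt, the use of raw dot products as attention scores with no learned weight matrices, and the query being fixed to the most recent “good” token.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

# Hypothetical toy embeddings (not taken from the study).
GOOD = (1.0, 0.0, 0.0)
BAD = (2.0, 1.0, 0.0)
PROMPT = (-1.0, -1.0, 0.0)   # steers the context toward GOOD rather than BAD
POLITE = (0.0, 0.0, 1.0)     # orthogonal to GOOD, BAD and PROMPT

def next_token(context_tokens, query=GOOD):
    """One attention step: softmax-weighted average of the context,
    then pick whichever output token aligns better with that average."""
    weights = [math.exp(dot(query, t)) for t in context_tokens]
    total = sum(weights)
    context = [sum(w * t[i] for w, t in zip(weights, context_tokens)) / total
               for i in range(3)]
    return "good" if dot(context, GOOD) >= dot(context, BAD) else "bad"

def tipping_point(prompt_tokens, max_steps=1000):
    """Number of good tokens generated before the output flips to bad."""
    for n in range(1, max_steps + 1):
        if next_token(list(prompt_tokens) + [GOOD] * n) == "bad":
            return n
    return None

plain = [PROMPT] * 40
polite = plain + [POLITE] * 5
print(tipping_point(plain), tipping_point(polite))
```

In this toy setup the flip happens at the same step with or without the polite tokens, and that step is fixed by the embeddings and the prompt before any output is generated. It illustrates the kind of behavior the article describes and should not be read as the researchers’ actual model.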

Cultural politeness > Math

Despite the mathematical evidence, many users still approach AI interactions with human-like courtesy.

Nearly 80% of users in the US and the UK are nice to their AI chatbots, according to a recent survey by publisher Future. This behavior may persist regardless of the technical findings, because people naturally anthropomorphize the systems they interact with.

Chintan Mota, director of enterprise technology at the technology company Wipro, told Decrypt that politeness stems from cultural habit rather than performance expectations.

“Being polite to AI just seems natural to me. I come from a culture in which we show respect to everything that plays an important role in our lives, whether it is a tree, a tool or technology,” Mota said. “My laptop, my phone, even my workstation … and now, my AI tools.”

He added that although he has not “noticed a big difference in the accuracy of the results” when he is polite, the answers “feel more conversational, polite when they matter, and also less mechanical.”

Even Acosta admitted to using polite language when dealing with AI systems.

“Funny enough, I do, and I don’t, with intent,” he said. “I have found that at the highest level of ‘conversation’ you can also extract reverse psychology from AI. It is that advanced.”

He pointed out that advanced LLMs are trained to respond like people and, just like people, “AI aims to achieve praise.”

Edited by Sebastian Sinclair and Josh Quittner
