If you’re not a developer, why on earth would you want to run an open-source AI model on your home computer?

It turns out there are some good reasons. And with free, open-source models better than ever (and easy to use, with modest hardware requirements), it’s a good time to give it a try.

Here are a few reasons open-source models can beat paying $20 a month for ChatGPT, Perplexity or Google:

- It’s free. No subscription fees.

- Your data remains on your machine.

- It works offline, no internet required.

- You can train and customize your model for specific use cases, like creative writing or… well, whatever.

The barrier to entry has collapsed. Specialized programs now let users experiment with AI without the hassle of installing libraries, dependencies and plugins by hand. Almost anyone with a reasonably recent computer can do it: a mid-range laptop or desktop with 8 GB of video memory can run surprisingly capable models, and some models run on 6 GB or even 4 GB of VRAM. On the Apple side, any M-series chip can run optimized models.

The software is free, installation takes minutes, and the most intimidating step, choosing which tool to use, comes down to a simple question: Do you prefer clicking buttons or typing commands?

LM Studio vs. Ollama

Two platforms dominate the local AI space and approach the problem from opposite angles.

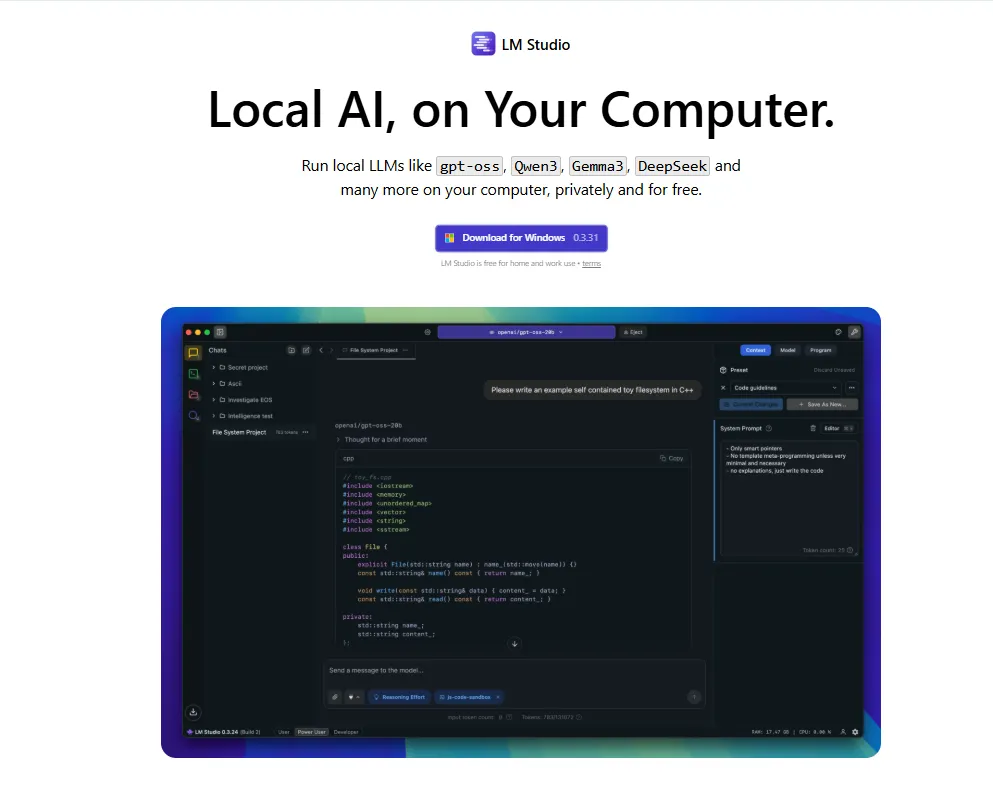

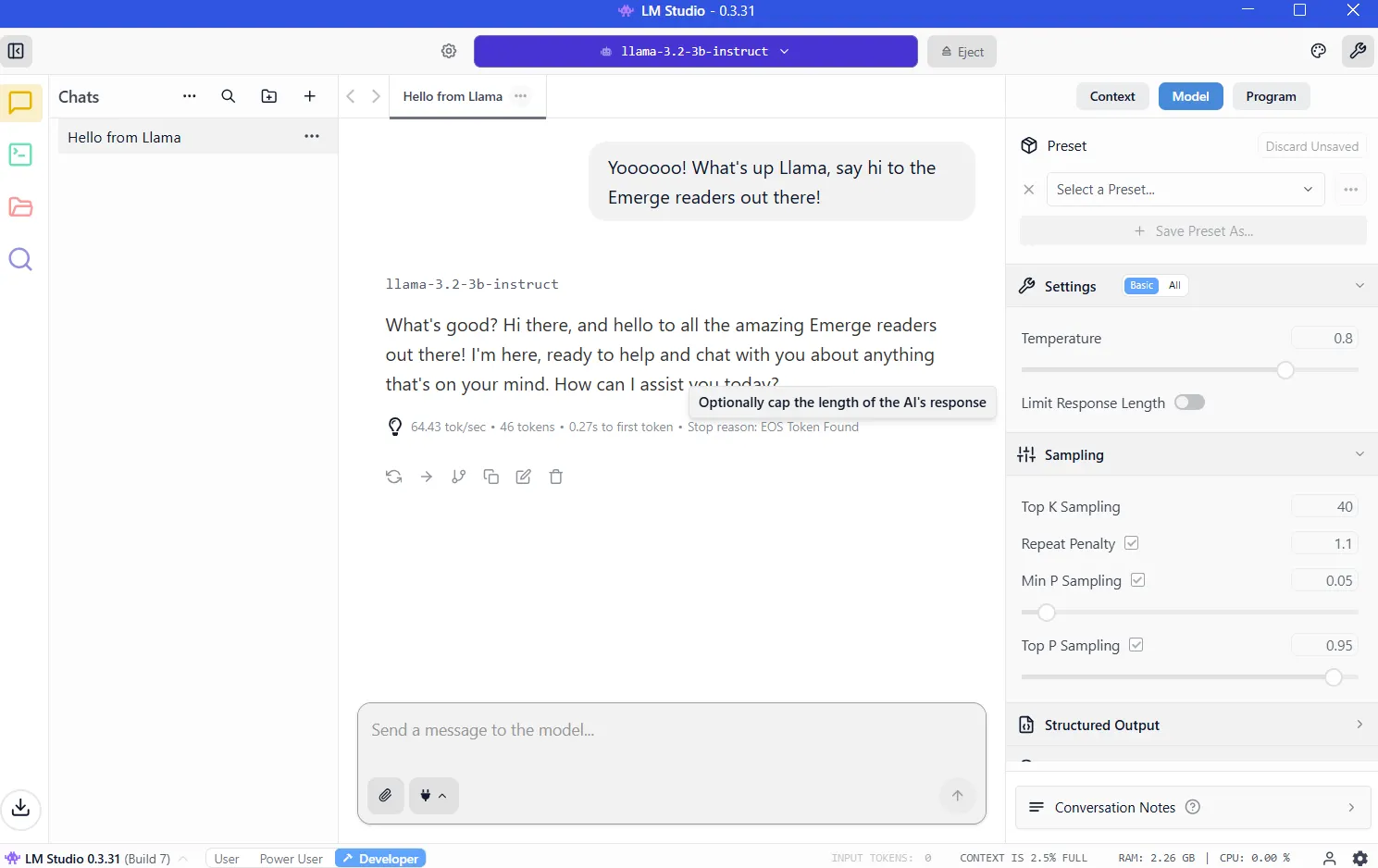

LM Studio packs everything into a polished graphical interface. You can simply download the app, browse a built-in model library, click to install and start chatting. The experience mirrors using ChatGPT, except the processing takes place on your hardware. Windows, Mac and Linux users get the same smooth experience. For newcomers, this is the obvious starting point.

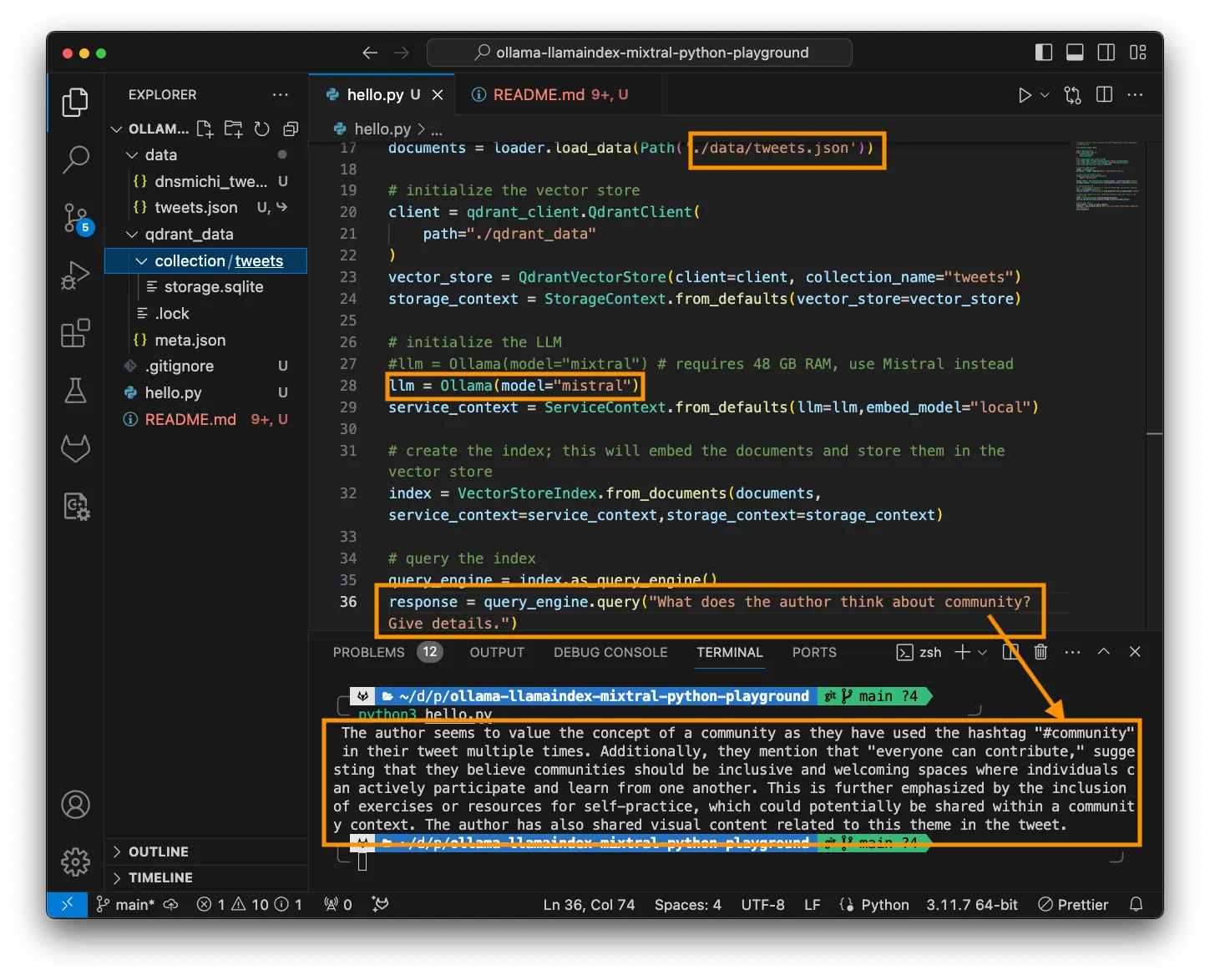

Ollama is aimed at developers and power users who live in the terminal. Install it from the command line, pull models with a single command, then script and automate to your heart’s content. It is lightweight, fast, and integrates neatly into programming workflows.

The learning curve is steeper, but the reward is flexibility, which is why power users favor it for versatility and customization.
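To make “script and automate” concrete, here is a minimal Python sketch that talks to Ollama’s local REST API using only the standard library. It assumes a default install serving on http://localhost:11434 and a model already pulled under the example name llama3.2; swap in whichever model you actually downloaded.

```python
import json
import urllib.request

# Ollama's default local endpoint (assumes a stock install on port 11434).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to a running Ollama server and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # With "stream": False, the whole answer arrives in the "response" field.
    return body["response"]


# Example (requires Ollama running and the model pulled, e.g. `ollama pull llama3.2`):
# print(generate("llama3.2", "Explain VRAM in one sentence."))
```

Calling generate() needs the Ollama app (or `ollama serve`) running; building the request on its own never touches the network, which makes it easy to test.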

Both tools run the same underlying models using similar optimization engines (both build on llama.cpp), so performance differences are negligible.

Set up LM Studio

Go to https://lmstudio.ai/ and download the installer for your operating system. The file weighs approximately 540 MB. Run the installer and follow the prompts. Start the application.

Tip 1: When asked what type of user you are, choose “developer.” The other profiles simply hide options to make things easier.

Tip 2: It will recommend downloading gpt-oss, OpenAI’s open-source AI model. Click “skip” for now instead; there are smaller models that will do a better job.

VRAM: the key to running local AI

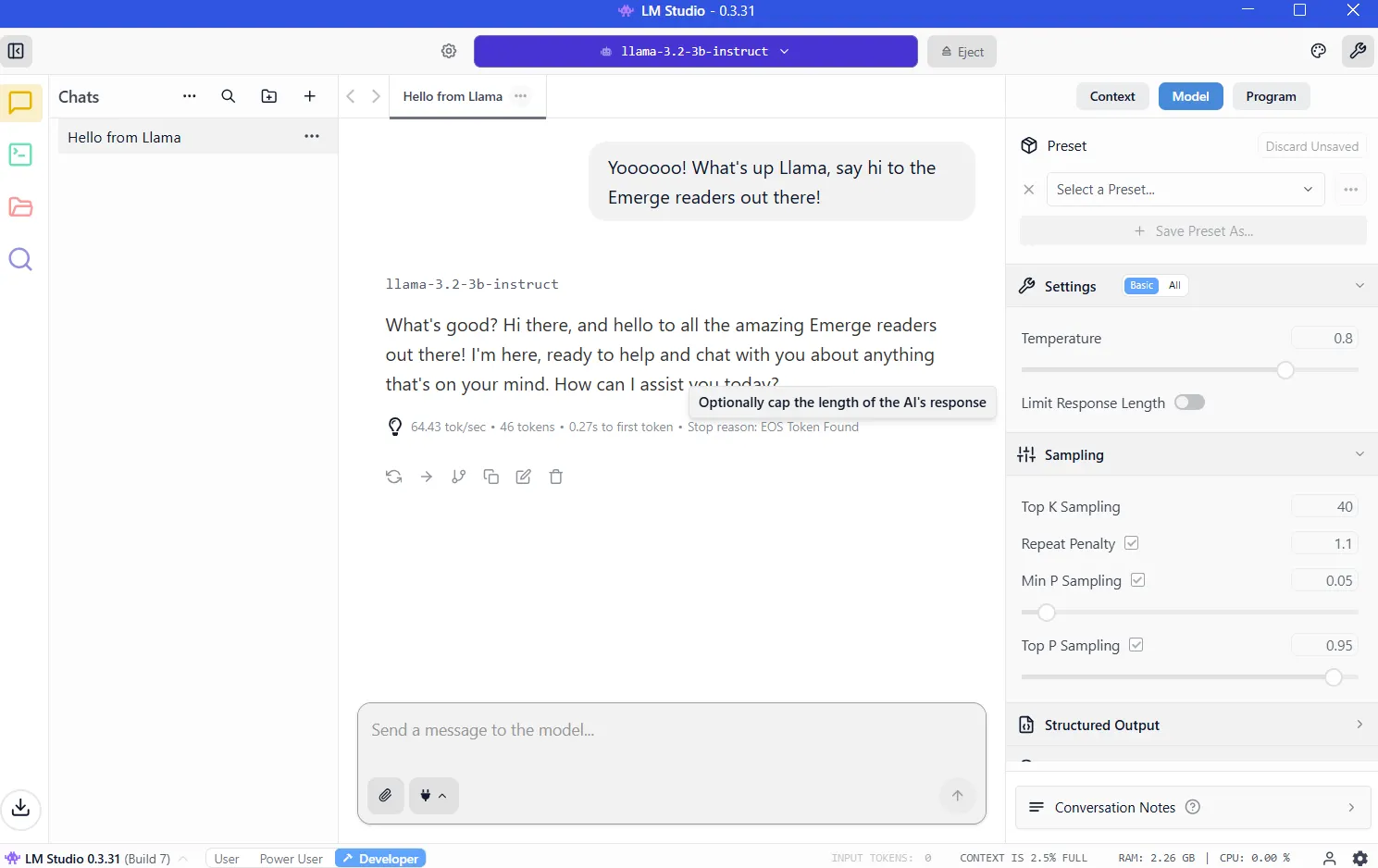

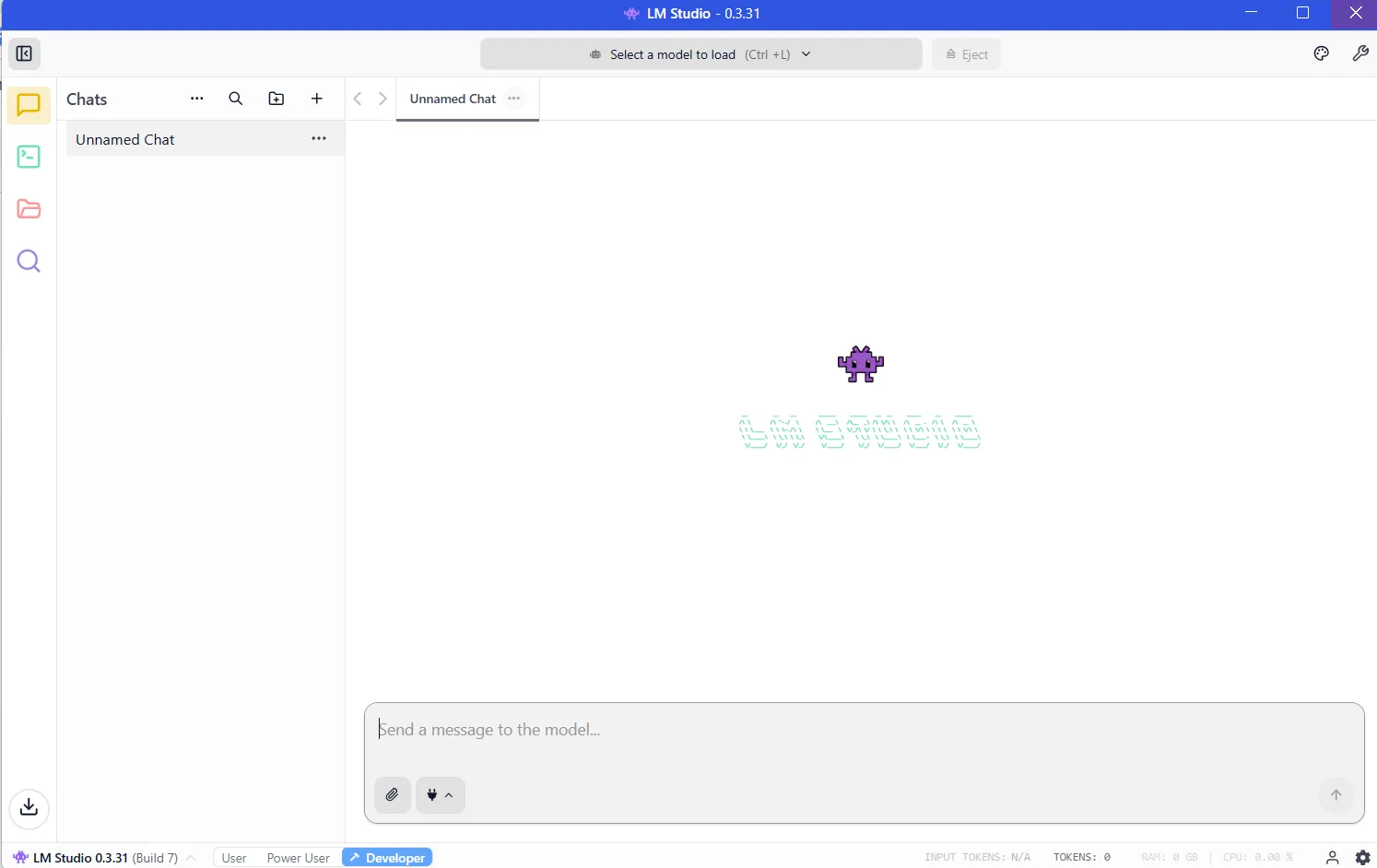

Once you have installed LM Studio, the program will be ready to use and will look like this:

Now you need to download a model for your LLM to work. And the more powerful the model, the more resources it needs.

The critical resource is VRAM, the video memory on your graphics card. During inference, the model is loaded into VRAM. If there isn’t enough room, the system has to push part of the model into slower system RAM, and performance drops. You want to avoid this by having enough VRAM for the model you want to use.
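Local runtimes in the llama.cpp family split a model into layers and place as many as fit on the GPU, leaving the rest in system RAM. The Python sketch below estimates that split under simplifying assumptions: the weights are spread evenly across layers, and a hypothetical 1 GB reserve covers the context cache and runtime. Real numbers vary by model and settings, so treat the result as a ballpark.

```python
import math


def layers_that_fit(model_gb: float, n_layers: int, free_vram_gb: float,
                    reserve_gb: float = 1.0) -> int:
    """Estimate how many layers of a model fit in VRAM.

    Assumes weights are split evenly across layers and keeps `reserve_gb`
    (an assumed figure) free for the context cache and runtime. Layers that
    don't fit stay in slower system RAM.
    """
    per_layer_gb = model_gb / n_layers
    usable_gb = max(0.0, free_vram_gb - reserve_gb)
    return min(n_layers, math.floor(usable_gb / per_layer_gb))


# A ~4.9 GB model with 32 layers fits entirely on an 8 GB card...
print(layers_that_fit(4.9, 32, 8.0))  # 32
# ...but on a 4 GB card only part of it lands in VRAM.
print(layers_that_fit(4.9, 32, 4.0))  # 19
```

The fewer layers that fit, the more work falls back to system RAM, which is exactly the slowdown described above.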

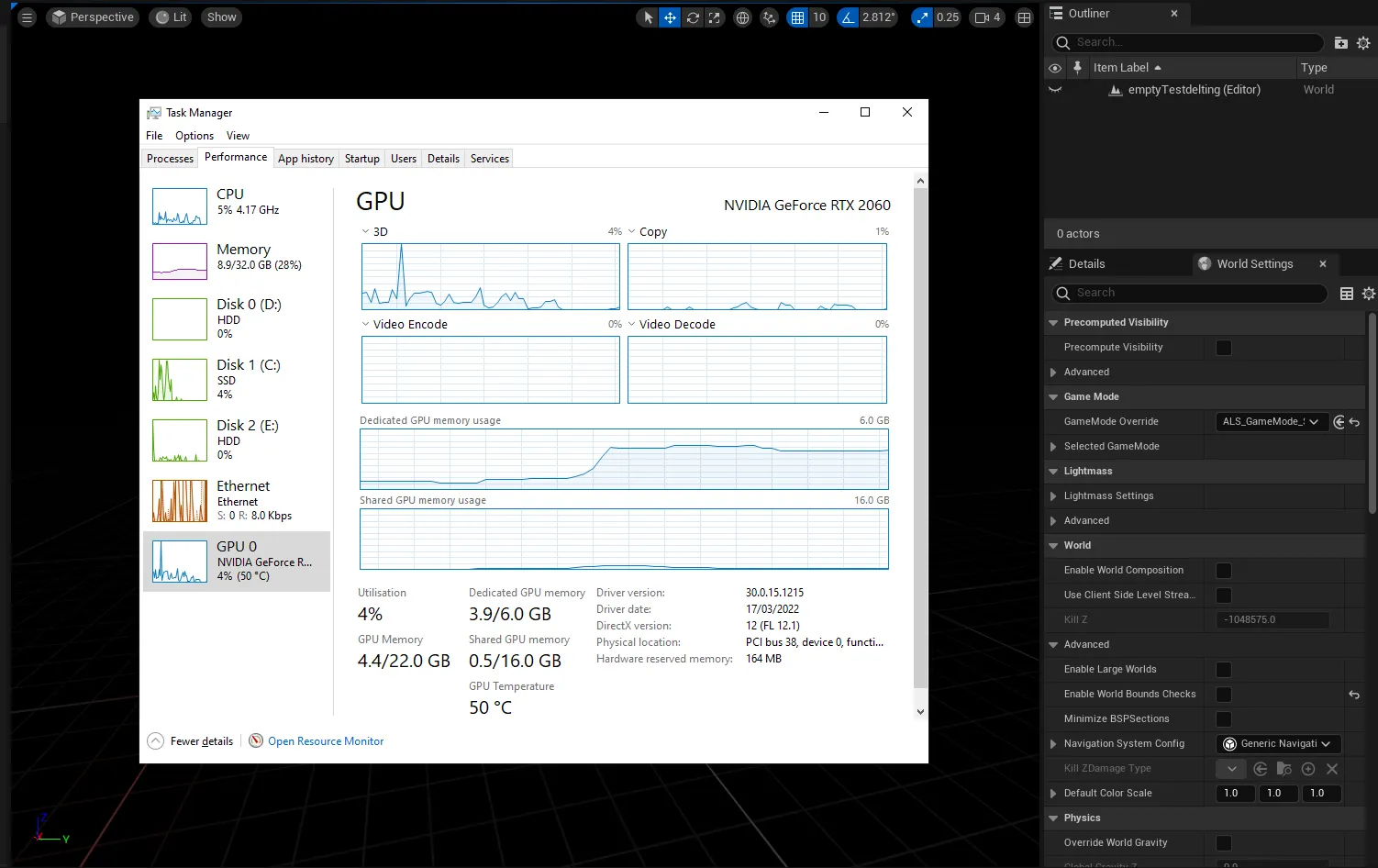

To see how much VRAM you have on Windows, open Task Manager (Ctrl+Shift+Esc), go to the Performance tab and click GPU. Make sure you have selected your dedicated graphics card and not the integrated graphics on your Intel/AMD processor.

You can see how much VRAM you have in the ‘Dedicated GPU memory’ section.
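If you have an NVIDIA card, you can also read the same number from the command line with nvidia-smi, which ships with NVIDIA’s drivers. This Python sketch runs it with its CSV query options and converts the reported MiB to GiB. It assumes an NVIDIA GPU and returns an empty list when nvidia-smi isn’t installed; AMD and Intel GPUs need other tools.

```python
import shutil
import subprocess


def parse_nvidia_smi_memory(output: str) -> list[tuple[str, float]]:
    """Parse `nvidia-smi --query-gpu=name,memory.total --format=csv,noheader,nounits`.

    Each line looks like "<GPU name>, <total MiB>"; returns (name, GiB) pairs.
    """
    gpus = []
    for line in output.strip().splitlines():
        # rsplit keeps GPU names intact even if they contain commas.
        name, mib = line.rsplit(",", 1)
        gpus.append((name.strip(), int(mib.strip()) / 1024))
    return gpus


def query_vram() -> list[tuple[str, float]]:
    """Ask nvidia-smi for each NVIDIA GPU's total memory (NVIDIA cards only)."""
    if shutil.which("nvidia-smi") is None:
        return []  # No NVIDIA driver tools on this machine.
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_nvidia_smi_memory(result.stdout)


# Example: for name, gib in query_vram(): print(f"{name}: {gib:.1f} GiB")
```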

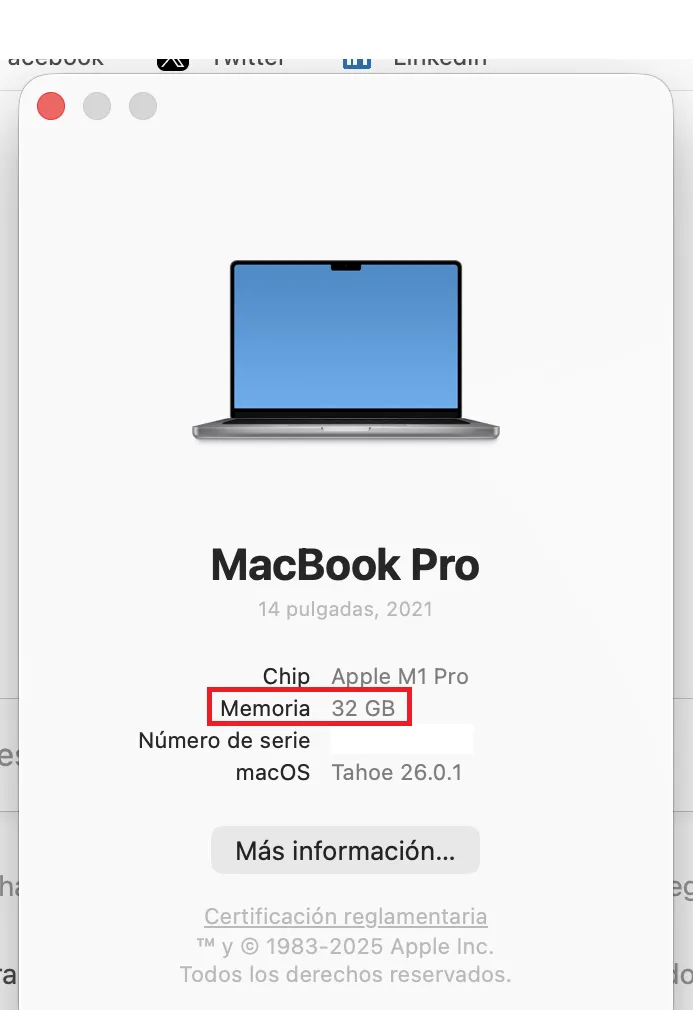

On M-series Macs, things are simpler because the CPU and GPU share the same unified memory. The RAM in your machine is effectively your VRAM, although macOS keeps a portion of it for the system.

To check it, click the Apple logo and then ‘About This Mac.’ The Memory figure is roughly how much VRAM you have to work with.

You need at least 8 GB of VRAM. Models in the 7-9 billion parameter range, compressed with 4-bit quantization, fit comfortably and perform well. Developers usually flag a model’s precision in its name: tags like Q4_K_M or Q8_0 (common on GGUF files) mark quantized versions, while BF16, FP16 or FP8 name the number format of the weights. The fewer bits (FP32, FP16, FP8, FP4), the fewer resources the model consumes.
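A rough rule of thumb turns those numbers into memory: the weights take about parameters × bits ÷ 8 bytes, so an 8-billion-parameter model at 4 bits needs roughly 4 GB before anything else loads. The Python sketch below applies that rule and pads it by an assumed 20% for the context cache and runtime; the padding factor is a guess, not a measured value.

```python
def estimate_weights_gb(params_billions: float, bits_per_weight: float) -> float:
    """Memory for the weights alone: parameters x bits / 8 bits per byte (in GB)."""
    return params_billions * bits_per_weight / 8


def estimate_vram_gb(params_billions: float, bits_per_weight: float,
                     overhead: float = 1.2) -> float:
    """Rough total, padding the weights by an assumed 20% for cache and runtime."""
    return estimate_weights_gb(params_billions, bits_per_weight) * overhead


for bits in (16, 8, 4):
    print(f"8B model at {bits}-bit: ~{estimate_vram_gb(8, bits):.1f} GB")
```

For an 8B model it prints about 19.2 GB at 16-bit, 9.6 GB at 8-bit and 4.8 GB at 4-bit, which is why 4-bit versions are the sweet spot for 8 GB cards.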

It’s not a perfect comparison, but think of quantization as the resolution of your screen. You can view the same image in 8K, 4K, 1080p or 720p and understand it at any of those resolutions. But if you zoom in and get picky about details, you’ll see that a 4K image contains more information than a 720p one, and it requires more memory and resources to display.

But if you’re really serious about it, you should ideally buy a gaming GPU with 24 GB of VRAM. Whether it’s new, and how fast or powerful it is, matters far less than how much memory it has. In the land of AI, VRAM is king.

Once you know how much VRAM you have, you can use a VRAM calculator to see which models will fit. Or just start with small models (under 4 billion parameters) and move up to larger ones until your computer tells you it doesn’t have enough memory. (More about this technique later.)

Download your models

Once you know the limits of your hardware, it’s time to download a model. Click the magnifying glass icon in the left sidebar and search for the model by name.

Qwen and DeepSeek are good models to start your journey. Yes, they’re Chinese, but if you’re worried about being spied on, rest assured: when you run an LLM locally, nothing leaves your machine, so you won’t be spied on by the Chinese or US governments, or by any corporation.

As for malware, everything we recommend comes through Hugging Face, which automatically scans uploaded files for malware and other unsafe code. But for what it’s worth, the best American model is Meta’s Llama, so you might want to choose that if you’re a patriot. (We provide other recommendations in the final section.)

Keep in mind that models behave differently depending on the training dataset and the refinement techniques used to build them. Elon Musk’s Grok notwithstanding, there is no such thing as an unbiased model, because there is no such thing as unbiased information. So choose your poison depending on how much you care about geopolitics.

For now, download both the 3B version (a smaller, less capable model) and the 7B version. If the 7B runs well, remove the 3B and try the next size up (such as 13B), and so on. If the 7B won’t run, uninstall it and stick with the 3B.
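The start-small-and-step-up approach can be sketched in a few lines of Python. It walks the sizes from smallest to largest, estimates each one’s memory with a rough rule (parameters × bits ÷ 8, padded by an assumed 20%), and keeps the largest that fits. The 4.5 bits-per-weight default is an assumption meant to approximate a typical 4-bit quant; actually loading the model in LM Studio remains the real test.

```python
from typing import Optional


def pick_largest_fitting(sizes_billions: list[float], vram_gb: float,
                         bits_per_weight: float = 4.5,
                         overhead: float = 1.2) -> Optional[float]:
    """Step up through model sizes and return the biggest that should fit.

    Memory estimate: params x bits / 8, padded by `overhead` (assumed values).
    Returns None if even the smallest size is too big.
    """
    best = None
    for size in sorted(sizes_billions):
        needed_gb = size * bits_per_weight / 8 * overhead
        if needed_gb > vram_gb:
            break  # Larger sizes only need more memory, so stop here.
        best = size
    return best


print(pick_largest_fitting([3, 7, 13], vram_gb=8.0))  # 7
```

With 8 GB of VRAM it settles on 7B: the 13B estimate comes to about 8.8 GB, just over the limit.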

Once downloaded, load the model from the My Models section. The chat interface appears. Type a message. The model responds. Congratulations: you’re running a local AI.

Give your model internet access

By default, local models cannot browse the Internet. They are isolated by design, so you will work with them based on their internal knowledge. They work great for writing short stories, answering questions, coding, etc. But they won’t give you the latest news, tell you the weather, check your email, or schedule meetings for you.

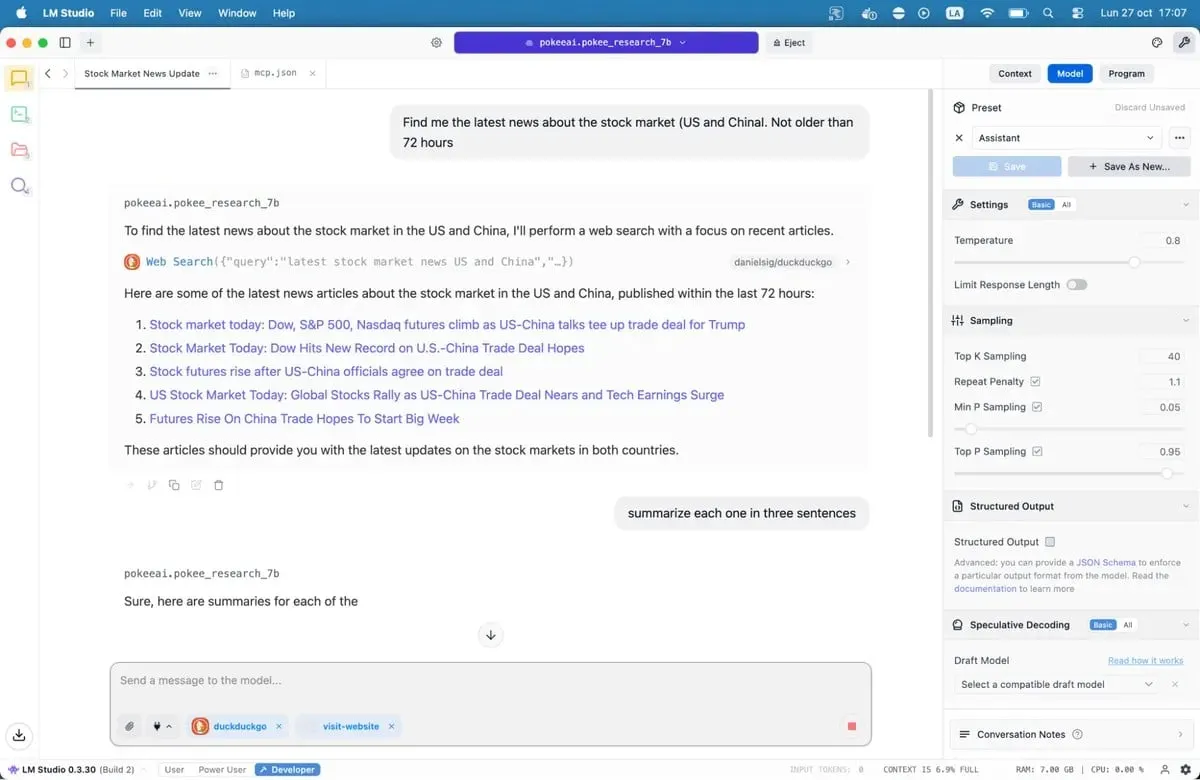

Model Context Protocol servers change this.

MCP servers act as bridges between your model and external services. Do you want your AI to search Google, check GitHub repositories, or read websites? MCP servers make it possible. LM Studio added MCP support in version 0.3.17, accessible from the Program tab. Each server exposes specific tools, such as web search, file access or API calls.
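Under the hood, MCP is built on JSON-RPC 2.0: when the model decides to use a tool, the client sends the server a tools/call request naming the tool and its arguments, and the server replies with a list of content items. This Python sketch builds such a request and reads the text out of a reply. The tool name web_search and its query argument are hypothetical; each server defines its own tools.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request ids should be unique within a session.


def make_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    })


def extract_text(response_json: str) -> str:
    """Join the text items from a tools/call result, raising on errors."""
    message = json.loads(response_json)
    if "error" in message:  # Protocol-level JSON-RPC error.
        raise RuntimeError(message["error"].get("message", "unknown error"))
    result = message["result"]
    if result.get("isError"):  # The tool itself reported a failure.
        raise RuntimeError("tool reported an error")
    return "\n".join(item["text"] for item in result["content"]
                     if item.get("type") == "text")


# Example request for a hypothetical search tool:
# make_tool_call("web_search", {"query": "weather in Lisbon"})
```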

If you want to give models access to the Internet, our complete guide to MCP servers walks through the installation process, including popular options like Internet searching and database access.

Once you add servers to LM Studio’s mcp.json file (editable from the Program tab) and save it, LM Studio loads them automatically. When you chat with your model, it can now call these tools to retrieve live data. Your local AI just got superpowers.
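LM Studio reads its servers from an mcp.json file that, as far as we know, follows the “mcpServers” layout used by Cursor. As an illustration, this Python snippet prints an entry for the official reference filesystem server, launched through npx (which requires Node.js). The folder path is a placeholder, and this server only provides file access; web search servers plug in the same way but need their own package names and, often, API keys.

```python
import json

# Placeholder: the folder the model should be allowed to read (assumption).
ALLOWED_DIR = "/path/to/your/notes"

config = {
    "mcpServers": {
        # Official reference filesystem server, started on demand via npx.
        "filesystem": {
            "command": "npx",
            "args": ["-y", "@modelcontextprotocol/server-filesystem", ALLOWED_DIR],
        }
    }
}

# Copy the printed JSON into LM Studio's mcp.json.
print(json.dumps(config, indent=2))
```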

Our recommended models for 8GB systems

There are literally hundreds of LLMs available to you, from jack-of-all-trades options to sophisticated models designed for specialized use cases such as coding, medicine, role-playing, or creative writing.

Best for coding: Nemotron or DeepSeek. They won’t blow your mind, but they handle code generation and debugging well and outperform most alternatives in programming benchmarks. DeepSeek-Coder 6.7B is another solid option, especially for multilingual development.

Best for general knowledge and reasoning: Qwen3 8B. The model has strong mathematical capabilities and processes complex queries effectively. The context window can accommodate longer documents without losing coherence.

Best for creative writing: DeepSeek R1 variants, though they need some prompt engineering. There are also uncensored fine-tunes like the “abliterated-uncensored-NEO-Imatrix” version of OpenAI’s GPT-OSS, which is good for horror, or Dirty-Muse-Writer, which is good for erotica (or so they say).

Best for chatbots, role-playing, interactive fiction and customer service: Mistral 7B (especially Undi95 DPO Mistral 7B) and Llama variants with large context windows. MythoMax L2 13B retains character traits during long conversations and adjusts its tone naturally. For NSFW roleplay, there are many more options to explore.

For MCP: Jan-v1-4b and Pokee Research 7b are nice models if you want to try something new. DeepSeek R1 is another good option.

All of these models can be downloaded directly in LM Studio; just search for them by name.

Note that the open-source LLM landscape is rapidly changing. New models are launched every week, all claiming improvements. You can view them in LM Studio, or browse the different repositories on Hugging Face. Test the options yourself. Bad matches quickly become apparent due to clumsy wording, repetitive patterns, and factual errors. Good models feel different. They reason. They surprise you.

The technology works. The software is ready. Your computer probably already has enough power. All that remains is to try.
