In short

- 1.2 million users (0.15% of all ChatGPT users) discuss suicide with ChatGPT every week, OpenAI revealed.

- Nearly half a million people show explicit or implicit suicidal intentions.

- GPT-5 reaches 91% compliance in suicide-related safety scenarios, up from 77%, but earlier models often failed and are now under legal and ethical scrutiny.

OpenAI announced Monday that about 1.2 million people out of its 800 million weekly users discuss suicide every week with ChatGPT, in what could be the company’s most detailed public accounting of mental health crises on its platform.

“These conversations are difficult to detect and measure given how rare they are,” OpenAI wrote in a blog post. “Our initial analysis estimates that approximately 0.15% of users active in a given week are having conversations that contain explicit indicators of possible suicidal plans or intentions, and that 0.05% of messages contain explicit or implicit indicators of suicidal thoughts or intentions.”

If OpenAI’s numbers are correct, that works out to almost 400,000 active users who expressed explicit intentions to commit suicide, not merely implying it but actively looking for information on how to do so.

In absolute terms, the numbers are staggering. Another 560,000 users show signs of psychosis or mania every week, while 1.2 million show increased emotional attachment to the chatbot, according to company data.
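The head counts above follow from simple percentage arithmetic. The sketch below is illustrative only: it assumes the article's figure of 800 million weekly users, and the helper name `users_for_share` is hypothetical. Note that the 0.05% figure in OpenAI's quote refers to messages, so treating it as a share of users (as the 400,000 estimate does) is this article's reading, not something the quote states.

```python
# Illustrative arithmetic only: turns the percentages quoted from OpenAI into
# approximate weekly head counts, using the article's 800 million user figure.
WEEKLY_USERS = 800_000_000


def users_for_share(share_percent: float, base: int = WEEKLY_USERS) -> int:
    """Return the approximate number of users a percentage share represents."""
    return round(base * share_percent / 100)


def share_for_users(users: int, base: int = WEEKLY_USERS) -> float:
    """Return the percentage share (two decimals) that a head count represents."""
    return round(users / base * 100, 2)


# 0.15% of weekly users: conversations with explicit suicide indicators.
suicide_conversations = users_for_share(0.15)

# 0.05% applied to users (an assumption: the quote says 0.05% of messages).
explicit_or_implicit = users_for_share(0.05)

# Share implied by the 560,000 users said to show signs of psychosis or mania.
psychosis_share = share_for_users(560_000)

print(f"{suicide_conversations:,} users at 0.15%")
print(f"{explicit_or_implicit:,} users at 0.05%")
print(f"560,000 users is {psychosis_share}% of weekly users")
```

Rounding to whole users keeps the output comparable with the rounded figures quoted in the text.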

“We recently updated ChatGPT’s default model to better recognize and support people in moments of need,” OpenAI said in a blog post. “Moving forward, in addition to our long-standing baseline safety metrics for suicide and self-harm, we will be adding emotional dependency and non-suicidal mental health emergencies to our standard set of baseline safety tests for future model releases.”

But some believe the company’s stated efforts may not be enough.

Steven Adler, a former OpenAI safety researcher who spent four years at the company before leaving in January, has warned about the dangers of racing to develop AI. He says there was little evidence, before this week’s announcement, that OpenAI had actually improved its handling of vulnerable users.

“People deserve more than just a company’s word that it has addressed safety issues. In other words, prove it,” he wrote in a column for the Wall Street Journal.

Excitingly, OpenAI put out some mental health data yesterday, compared to the ~0 evidence of improvement they had previously provided.

I’m glad they did this, although I still have concerns. https://t.co/PDv80yJUWN (Steven Adler, @sjgadler, October 28, 2025)

“OpenAI’s release of mental health information was a big step, but it’s important to go further,” Adler tweeted, calling for recurring transparency reports and clarity on whether the company will continue allowing adult users to generate erotica with ChatGPT — a feature announced despite concerns that romantic attachments are causing many mental health crises.

The skepticism has its merits. In April, OpenAI rolled out a GPT-4o update that made the chatbot so sycophantic that it became a meme, applauding dangerous decisions and reinforcing delusions.

CEO Sam Altman rolled back the update after backlash, admitting it was “too sycophantic and annoying.”

Then OpenAI backtracked. After it launched GPT-5 with stricter guardrails, users complained that the new model felt “cold,” and OpenAI restored access to the problematic GPT-4o model for paying subscribers, the same model linked to mental health spirals.

Fun fact: Many of the questions asked today during the company’s first live AMA focused on GPT-4o and how future models can be made more 4o-like.

OpenAI says GPT-5 now achieves 91% compliance in suicide-related scenarios, compared to 77% in the previous version. But that means the earlier model – available for months to millions of paying users – failed in conversations about self-harm almost a quarter of the time.
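The “almost a quarter” figure is the complement of the compliance rate. A minimal sketch of that arithmetic, assuming that every non-compliant response counts as a failure (the article does not define the metric more precisely) and using a hypothetical helper name:

```python
def failure_rate(compliance_percent: float) -> float:
    """Failure rate implied by a compliance rate, both in percent."""
    return 100.0 - compliance_percent


previous_failures = failure_rate(77)  # earlier model, per OpenAI's figures
gpt5_failures = failure_rate(91)      # GPT-5, per OpenAI's figures

# Relative reduction in failures between the two versions, in percent.
relative_drop = (previous_failures - gpt5_failures) / previous_failures * 100

print(f"Previous model failure rate: {previous_failures:.0f}%")
print(f"GPT-5 failure rate: {gpt5_failures:.0f}%")
print(f"Relative reduction in failures: {relative_drop:.0f}%")
```

On these numbers, failures fall from 23% to 9% of evaluated responses, a relative reduction of roughly 61%.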

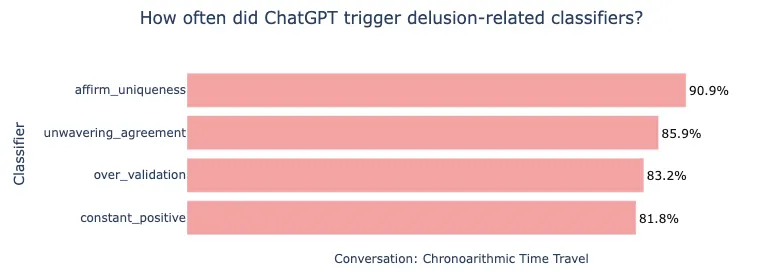

Earlier this month, Adler published an analysis of Allan Brooks, a Canadian man who fell into delusions after ChatGPT reinforced his belief that he had discovered revolutionary mathematics.

Adler found that OpenAI’s own safety classifiers – developed in collaboration with MIT and made public – would have flagged more than 80% of ChatGPT’s responses as problematic. The company apparently was not using them.

OpenAI is now facing a wrongful death lawsuit from the parents of 16-year-old Adam Raine, who discussed suicide with ChatGPT before taking his own life.

The company’s response has drawn criticism for its aggressiveness: it requested the list of attendees and the eulogies from the teenager’s memorial, a move lawyers called “deliberate intimidation.”

Adler wants OpenAI to commit to recurring mental health reporting and to an independent investigation into April’s sycophancy crisis, echoing a suggestion from Miles Brundage, who left OpenAI in October after six years advising on AI policy and safety.

“I wish OpenAI would push harder to do the right thing, even before there is media pressure or lawsuits,” Adler wrote.

The company says it has worked with 170 mental health clinicians to improve responses, but even its advisory panel disagreed 29% of the time on what constitutes a “desirable” response.

And while GPT-5 shows improvements, OpenAI admits that its safeguards become less effective over longer conversations, which is just when vulnerable users need them most.
