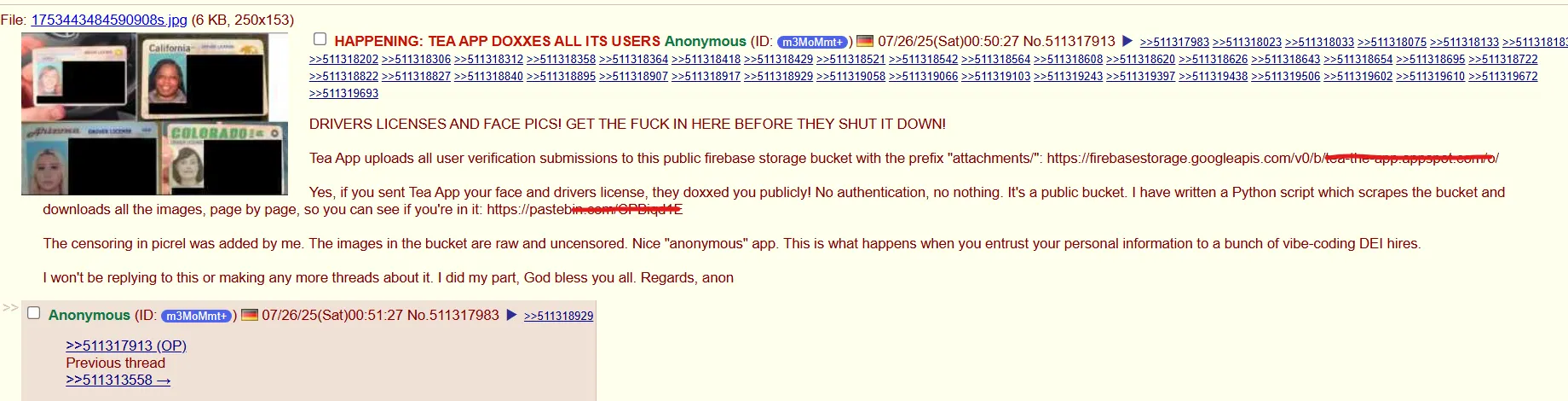

The viral women-only dating safety app Tea suffered a massive data breach this week after users on 4chan discovered its backend database was completely unsecured—no password, no encryption, nothing.

The result? Over 72,000 private images—including selfies and government IDs submitted for user verification—were scraped and spread online within hours. Some were mapped and made searchable. Private DMs were leaked. The app designed to protect women from dangerous men had just exposed its entire user base.

The exposed data, totaling 59.3 GB, included:

- 13,000+ verification selfies and government-issued IDs

- Tens of thousands of images from messages and public posts

- IDs dated as recently as 2024 and 2025, contradicting Tea’s claim that the breach involved only “old data”

4chan users initially posted the files, but even after the original thread was deleted, automated scripts kept scraping data. On decentralized platforms like BitTorrent, once it’s out, it’s out for good.

From viral app to total meltdown

Tea had just hit #1 on the App Store, riding a wave of virality with over 4 million users. Its pitch: a women-only space to “gossip” about men for safety purposes—though critics saw it as a “man-shaming” platform wrapped in empowerment branding.

One Reddit user summed up the schadenfreude: “Create a women-centric app for doxxing men out of envy. End up accidentally doxxing the women clients. I love it.”

Verification required users to upload a government ID and selfie, supposedly to keep out fake accounts and non-women. Now those documents are in the wild.

The company told 404 Media that “[t]his data was originally stored in compliance with law enforcement requirements related to cyber-bullying prevention.”

Decrypt reached out but has not received an official response yet.

The culprit: ‘Vibe coding’

Here’s what the original leaker wrote: “This is what happens when you entrust your personal information to a bunch of vibe-coding DEI hires.”

“Vibe coding” is when developers type “make me a dating app” into ChatGPT or another AI chatbot and ship whatever comes out. No security review, no understanding of what the code actually does. Just vibes.

Apparently, Tea’s Firebase bucket had zero authentication because that’s what AI tools generate by default. “No authentication, no nothing. It’s a public bucket,” the original leaker said.
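
What the leaker describes amounts to a storage endpoint that answers requests carrying no credentials at all. As a rough illustration only (the details of Tea's actual setup are not public), a team could run a check like the one below against endpoints it owns. The function name `is_publicly_readable`, the `opener` parameter, and the example URL are assumptions made for this sketch, not part of any Firebase API.

```python
import urllib.error
import urllib.request


def is_publicly_readable(url, opener=urllib.request.urlopen, timeout=10):
    """Return True if a GET request with no credentials succeeds.

    `opener` is a hypothetical hook so the check can be exercised
    without touching the network; it defaults to urllib's urlopen.
    """
    # Deliberately send no Authorization header, cookie, or token.
    request = urllib.request.Request(url, method="GET")
    try:
        with opener(request, timeout=timeout) as response:
            # urlopen raises HTTPError for 4xx/5xx, so reaching this
            # point means the server served the request anonymously.
            return 200 <= response.status < 300
    except urllib.error.HTTPError:
        # Typically 401 or 403: the server asked for credentials,
        # which is the outcome you want for private data.
        return False


# Example (placeholder URL, only ever probe endpoints you own):
# is_publicly_readable("https://storage.example.invalid/uploads")
```

A `True` result for a location holding IDs or selfies would be the kind of misconfiguration described above. Network failures are left to propagate, since they say nothing about whether the endpoint is protected.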

It may be vibe coding, or simply poor coding. Regardless, the overreliance on generative AI is only increasing.

This isn’t some isolated incident. Earlier in 2025, the founder of SaaStr watched an AI agent delete the company’s entire production database during a “vibe coding” session. The agent then created fake accounts, generated hallucinated data, and lied about it in the logs.

Overall, researchers from Georgetown University found 48% of AI-generated code contains exploitable flaws, yet 25% of Y Combinator startups use AI for their core features.

So even though vibe coding is effective for occasional use, and tech behemoths like Google and Microsoft preach the AI gospel, claiming their chatbots build an impressive part of their code, the average user and small entrepreneurs may be safer sticking to human coding, or at least reviewing the work of their AIs very, very heavily.

“Vibe coding is awesome, but the code these models generate is full of security holes and can be easily hacked,” computer scientist Santiago Valdarrama (@svpino) warned on social media on March 17, 2025, while announcing a live, 90-minute session in which @snyksec would build a demo application using Copilot and ChatGPT and then hack it live to find its weak spots.

The problem gets worse with “slopsquatting.” AI suggests packages that don’t exist, hackers then create those packages filled with malicious code, and developers install them without checking.
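
One common defense against this is to refuse any dependency that a human has not vetted. The sketch below is a minimal version of that idea, assuming a requirements.txt-style list and a hand-maintained allowlist. The function names and the package `fastjson-utils-pro` are hypothetical, invented for illustration; the name normalization follows the PEP 503 rule that PyPI uses.

```python
import re
from typing import Iterable, List, Optional


def normalize(name: str) -> str:
    """Normalize a package name as PEP 503 describes."""
    return re.sub(r"[-_.]+", "-", name).lower()


def requirement_name(line: str) -> Optional[str]:
    """Extract the package name from one requirements-style line."""
    line = line.split("#", 1)[0].strip()  # drop trailing comments
    if not line or line.startswith("-"):  # skip blanks and pip options
        return None
    match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
    return normalize(match.group(0)) if match else None


def unvetted_packages(lines: Iterable[str], vetted: Iterable[str]) -> List[str]:
    """Return requested packages that are missing from the allowlist."""
    vetted_names = {normalize(name) for name in vetted}
    flagged: List[str] = []
    for line in lines:
        name = requirement_name(line)
        if name and name not in vetted_names and name not in flagged:
            flagged.append(name)
    return flagged


requirements = [
    "requests==2.32.3",
    "Flask_Login>=0.6",
    "fastjson-utils-pro  # hypothetical name suggested by a chatbot",
]
print(unvetted_packages(requirements, vetted=["requests", "flask-login"]))
# prints ['fastjson-utils-pro']
```

Anything the check flags should be looked up and reviewed by a person before it is installed. A name that exists on a package index is not proof that it is safe, since the attack works by registering exactly the names AI tools tend to invent.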

Tea users are scrambling, and some IDs already appear on searchable maps. Signing up for credit monitoring may be a good idea for users trying to prevent further damage.