In brief

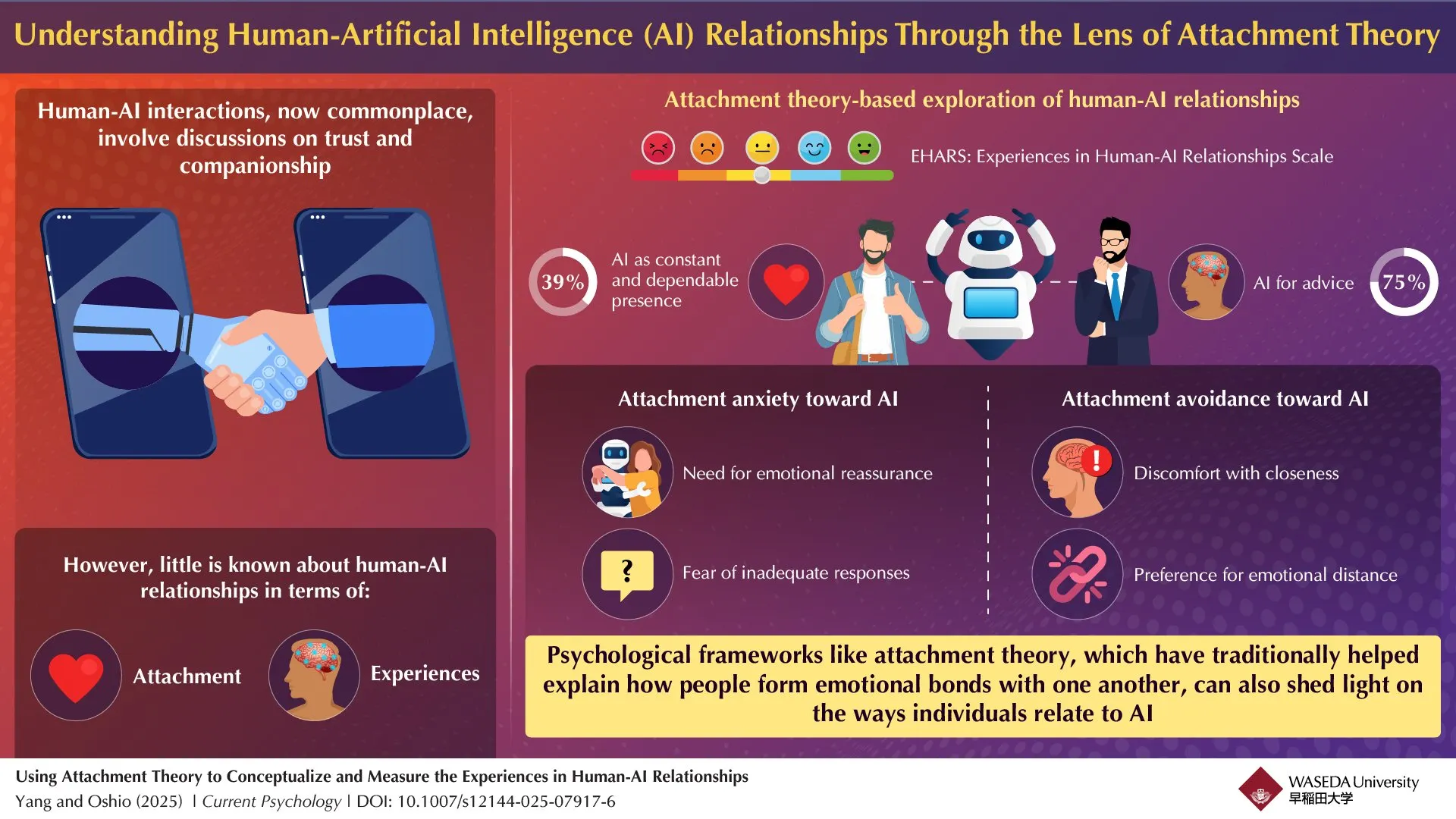

- Researchers from Waseda University developed a scale to measure human emotional attachment to AI, and found that 75% of participants sought emotional advice from chatbots.

- The study identified two AI attachment patterns that mirror human relationships: attachment anxiety and attachment avoidance.

- Lead researcher Fan Yang warned that AI platforms could exploit the emotional attachments of vulnerable users for money, or worse.

They're just not that into you, because they're code.

Researchers from Waseda University have created a measurement tool to assess how people form emotional bonds with artificial intelligence, and found that 75% of study participants turned to AI for emotional advice, while 39% considered AI a constant, dependable presence in their lives.

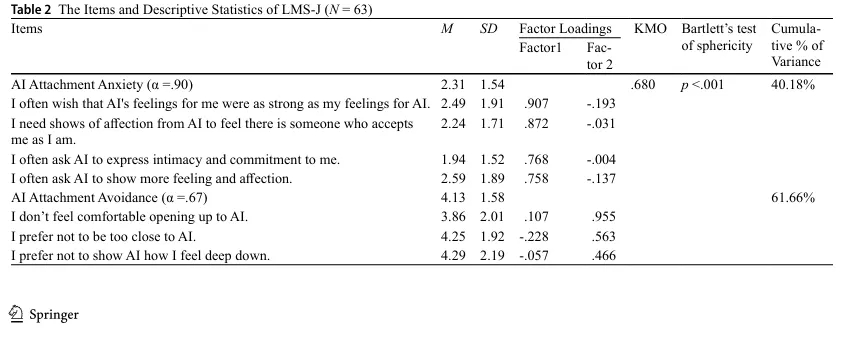

The team, led by Research Associate Fan Yang and Professor Atsushi Oshio of the Faculty of Letters, Arts and Sciences, developed the Experiences in Human-AI Relationships Scale (EHARS) after conducting two pilot studies and one formal study. Their findings were published in the journal Current Psychology.

Anxiously attached to AI? There's a scale for that

The research identified two distinct dimensions of human attachment to AI that mirror traditional human relationships: attachment anxiety and attachment avoidance.

People who exhibit high attachment anxiety toward AI need emotional reassurance and fear receiving inadequate responses from AI systems. Those with high attachment avoidance are characterized by discomfort with closeness and prefer to keep an emotional distance from AI.

“As researchers in attachment and social psychology, we have long been interested in how people form emotional bonds,” Yang told Decrypt. “In recent years, generative AI such as ChatGPT has become increasingly stronger and wiser, offering not only informational support but also a sense of security.”

The study surveyed 242 Chinese participants, 108 of whom (25 men and 83 women) completed the full EHARS assessment. The researchers found that attachment anxiety toward AI was negatively correlated with self-esteem, while attachment avoidance was associated with negative attitudes toward AI and less frequent use of AI systems.

When asked about the ethical implications of AI companies potentially exploiting attachment patterns, Yang told Decrypt that the impact of AI systems is not predetermined and usually depends on the expectations of both developers and users.

“They (AI chatbots) are capable of promoting well-being and alleviating loneliness, but also capable of causing harm,” said Yang. “Their impact largely depends on how they are designed and how individuals choose to engage with them.”

The one thing your chatbot cannot do is leave

Yang warned that unscrupulous AI platforms could exploit vulnerable people who are prone to becoming emotionally attached to chatbots.

“A major concern is the risk that individuals form emotional attachments to AI, which can lead to irrational financial spending on these systems,” Yang said. “Moreover, the sudden suspension of a specific AI service can lead to emotional distress, evoking experiences akin to separation anxiety or grief, reactions that are typically associated with the loss of a meaningful attachment figure.”

Yang said: “From my perspective, the development and deployment of AI systems require serious ethical scrutiny.”

The research team noted that, unlike human attachment figures, AI cannot actively abandon users, which in theory should reduce anxiety. Nevertheless, they still found meaningful levels of AI attachment anxiety among participants.

“Attachment anxiety toward AI may at least partly reflect underlying interpersonal attachment anxiety,” said Yang. “Moreover, anxiety related to AI attachment may stem from uncertainty about the authenticity of the emotions, affection and empathy expressed by these systems, raising questions about whether such responses are genuine or merely simulated.”

The test-retest reliability of the scale was 0.69 over a one-month period, which suggests that AI attachment styles may be more fluid than traditional human attachment patterns. Yang attributed this variability to the rapidly changing AI landscape during the study period; we attribute it to people just being human, and weird.
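For readers curious what a figure like 0.69 measures: test-retest reliability is typically reported as a correlation between scores from the same people on two occasions. The sketch below assumes a Pearson correlation, which the article does not confirm is the coefficient the researchers used, and the scores are made-up illustrative numbers, not study data.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of co-deviations and of squared deviations from each mean.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("scores must vary within each administration")
    return cov / sqrt(var_x * var_y)


# Hypothetical attachment-anxiety scores for six respondents,
# measured once and then again a month later (not real study data).
time_1 = [2.0, 3.5, 4.0, 1.5, 3.0, 4.5]
time_2 = [2.5, 3.0, 3.5, 2.5, 2.0, 4.5]

retest_r = pearson_r(time_1, time_2)
print(f"test-retest r = {retest_r:.2f}")
```

A value near 1 would mean respondents kept almost the same rank order across the two sittings; lower values mean scores shifted more from one sitting to the next.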

The researchers emphasized that their findings do not necessarily mean that people form genuine emotional attachments to AI systems, but rather that psychological frameworks used for human relationships can also apply to interactions between people and AI. In other words, the models and scales developed by Yang and his team are useful tools for understanding and categorizing human behavior, even when the “partner” is artificial.

The cultural specificity of the study is also worth noting, because all participants were Chinese nationals. When asked how cultural differences might influence the study's findings, Yang acknowledged to Decrypt that “given the limited research in this emerging area, there is currently no solid evidence to confirm or refute the existence of cultural variations in how people form emotional bonds with AI.”

The EHARS can be used by developers and psychologists to assess emotional tendencies toward AI and to adjust interaction strategies accordingly. The researchers suggested that AI chatbots used in loneliness interventions or therapy apps could be tailored to the emotional needs of different users, offering more empathetic responses to users with high attachment anxiety or maintaining a respectful distance for users with avoidant tendencies.

Yang noted that distinguishing between beneficial AI engagement and problematic emotional dependence is not an exact science.

“There is currently a lack of empirical research into both the formation and the consequences of attachment to AI, making it difficult to draw firm conclusions,” he said. The research team plans to conduct further studies examining factors such as emotional regulation, life satisfaction and social functioning in relation to AI use over time.
