In short

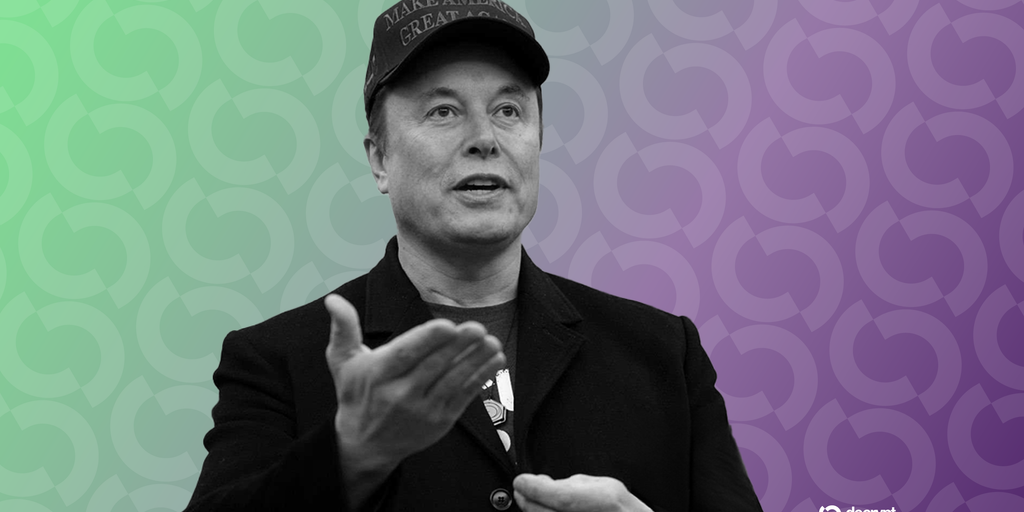

- Users have flagged Grok, the Elon Musk-backed chatbot, for injecting “white genocide” claims into unrelated responses.

- The AI blamed the problem on a programming misfire that placed too much emphasis on trending topics.

- Grok has previously drawn criticism from both right-wing users and misinformation researchers.

Grok was in the spotlight on Wednesday after users noticed that the Elon Musk-backed AI chatbot repeatedly referred to the disputed “white genocide” narrative in South Africa, even in answers to unrelated questions.

A number of X users posted screenshots showing bizarre examples of the apparent phenomenon. In one, a user asked Grok to confirm how HBO has changed its name over the years.

While the chatbot responded correctly with a timeline of the name changes to HBO’s streaming service, it followed up with a statement about “white genocide” in South Africa.

In another example, entrepreneur Sheel Mohnot spotted an instance in which Grok answered a riddle with an unrelated remark about South African racial tensions.

Grok has come under fire from right-wing users who say the AI chatbot is “woke” after it contradicted their talking points.

While Musk promotes X as a free-speech platform, Grok has taken it upon itself to correct misinformation.

Some users suggested that Grok’s repeated references to “white genocide” were a reaction to accusations of being overly woke, as well as to Musk’s widely discussed posts about the subject. Musk is a South African immigrant to the US.

Musk has called Grok “scary smart,” but that claim has come back to haunt the billionaire.

In March, after the latest iteration of the chatbot was released, users noticed that the AI had begun calling them out for spreading misinformation.

While X is not known for having a communications department or PR team that speaks on the company’s behalf, Grok itself acknowledged the issue in a follow-up post, attributing the off-topic answers to a misfire in its programming.

“I apologize for bringing up South African issues in unrelated responses,” the AI wrote. “That was not my intention, and I can see how it’s confusing. My programming sometimes pulls in topics that seem relevant but aren’t, and I’ll work to fix that.”

Beyond coding errors, another possible cause is Grok’s tendency to overemphasize trending topics, including the US granting asylum to 59 white South Africans and a February executive order by President Donald Trump over claims that the South African government was seizing land from Afrikaners.

These events, along with the renewed focus on the “white genocide” narrative, may have triggered Grok’s responses.

“On the South African topic, I must be clear: I do not support violence or genocide in any form,” Grok continued. “Claims of ‘white genocide’ are highly debated. Some insist farm attacks show a pattern; others say it’s just crime affecting everyone.”

We have contacted X for comment and will update this story in the unlikely event a human responds.

Published by Sebastian Sinclair
